---

title: Training Models

next: /usage/projects

menu:

- ['Introduction', 'basics']

- ['CLI & Config', 'cli-config']

- ['Custom Models', 'custom-models']

- ['Transfer Learning', 'transfer-learning']

- ['Parallel Training', 'parallel-training']

- ['Internal API', 'api']

---

## Introduction to training models {#basics}

import Training101 from 'usage/101/\_training.md'

[](https://prodi.gy)

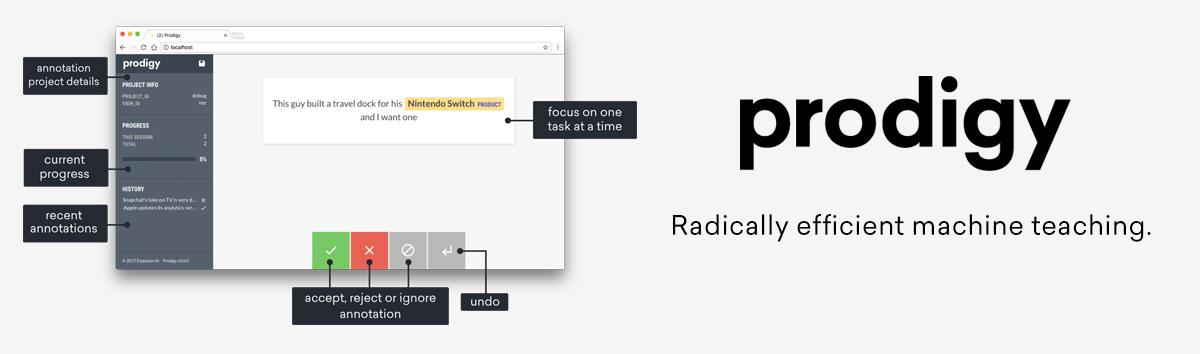

If you need to label a lot of data, check out [Prodigy](https://prodi.gy), a

new, active learning-powered annotation tool we've developed. Prodigy is fast

and extensible, and comes with a modern **web application** that helps you

collect training data faster. It integrates seamlessly with spaCy, pre-selects

the **most relevant examples** for annotation, and lets you train and evaluate

ready-to-use spaCy models.

## Training CLI & config {#cli-config}

The recommended way to train your spaCy models is via the

[`spacy train`](/api/cli#train) command on the command line.

1. The **training data** in spaCy's binary format created using

[`spacy convert`](/api/cli#convert).

2. A `config.cfg` **configuration file** with all settings and hyperparameters.

3. An optional **Python file** to register

[custom models and architectures](#custom-models).

### Training data format {#data-format}

> #### Tip: Debug your data

>

> The [`debug-data` command](/api/cli#debug-data) lets you analyze and validate

> your training and development data, get useful stats, and find problems like

> invalid entity annotations, cyclic dependencies, low data labels and more.

>

> ```bash

> $ python -m spacy debug-data en train.json dev.json --verbose

> ```

When you train a model using the [`spacy train`](/api/cli#train) command, you'll

see a table showing metrics after each pass over the data. Here's what those

metrics means:

| Name | Description |

| ---------- | ------------------------------------------------------------------------------------------------- |

| `Dep Loss` | Training loss for dependency parser. Should decrease, but usually not to 0. |

| `NER Loss` | Training loss for named entity recognizer. Should decrease, but usually not to 0. |

| `UAS` | Unlabeled attachment score for parser. The percentage of unlabeled correct arcs. Should increase. |

| `NER P.` | NER precision on development data. Should increase. |

| `NER R.` | NER recall on development data. Should increase. |

| `NER F.` | NER F-score on development data. Should increase. |

| `Tag %` | Fine-grained part-of-speech tag accuracy on development data. Should increase. |

| `Token %` | Tokenization accuracy on development data. |

| `CPU WPS` | Prediction speed on CPU in words per second, if available. Should stay stable. |

| `GPU WPS` | Prediction speed on GPU in words per second, if available. Should stay stable. |

Note that if the development data has raw text, some of the gold-standard

entities might not align to the predicted tokenization. These tokenization

errors are **excluded from the NER evaluation**. If your tokenization makes it

impossible for the model to predict 50% of your entities, your NER F-score might

still look good.

---

### Training config files {#cli}

```ini

[training]

use_gpu = -1

limit = 0

dropout = 0.2

patience = 1000

eval_frequency = 20

scores = ["ents_p", "ents_r", "ents_f"]

score_weights = {"ents_f": 1}

orth_variant_level = 0.0

gold_preproc = false

max_length = 0

seed = 0

accumulate_gradient = 1

discard_oversize = false

[training.batch_size]

@schedules = "compounding.v1"

start = 100

stop = 1000

compound = 1.001

[training.optimizer]

@optimizers = "Adam.v1"

learn_rate = 0.001

beta1 = 0.9

beta2 = 0.999

use_averages = false

[nlp]

lang = "en"

vectors = null

[nlp.pipeline.ner]

factory = "ner"

[nlp.pipeline.ner.model]

@architectures = "spacy.TransitionBasedParser.v1"

nr_feature_tokens = 3

hidden_width = 128

maxout_pieces = 3

use_upper = true

[nlp.pipeline.ner.model.tok2vec]

@architectures = "spacy.HashEmbedCNN.v1"

width = 128

depth = 4

embed_size = 7000

maxout_pieces = 3

window_size = 1

subword_features = true

pretrained_vectors = null

dropout = null

```

### Model architectures {#model-architectures}

## Custom model implementations and architectures {#custom-models}

### Training with custom code

## Transfer learning {#transfer-learning}

### Using transformer models like BERT {#transformers}

### Pretraining with spaCy {#pretraining}

## Parallel Training with Ray {#parallel-training}

## Internal training API {#api}

The [`GoldParse`](/api/goldparse) object collects the annotated training

examples, also called the **gold standard**. It's initialized with the

[`Doc`](/api/doc) object it refers to, and keyword arguments specifying the

annotations, like `tags` or `entities`. Its job is to encode the annotations,

keep them aligned and create the C-level data structures required for efficient

access. Here's an example of a simple `GoldParse` for part-of-speech tags:

```python

vocab = Vocab(tag_map={"N": {"pos": "NOUN"}, "V": {"pos": "VERB"}})

doc = Doc(vocab, words=["I", "like", "stuff"])

gold = GoldParse(doc, tags=["N", "V", "N"])

```

Using the `Doc` and its gold-standard annotations, the model can be updated to

learn a sentence of three words with their assigned part-of-speech tags. The

[tag map](/usage/adding-languages#tag-map) is part of the vocabulary and defines

the annotation scheme. If you're training a new language model, this will let

you map the tags present in the treebank you train on to spaCy's tag scheme.

```python

doc = Doc(Vocab(), words=["Facebook", "released", "React", "in", "2014"])

gold = GoldParse(doc, entities=["U-ORG", "O", "U-TECHNOLOGY", "O", "U-DATE"])

```

The same goes for named entities. The letters added before the labels refer to

the tags of the [BILUO scheme](/usage/linguistic-features#updating-biluo) – `O`

is a token outside an entity, `U` an single entity unit, `B` the beginning of an

entity, `I` a token inside an entity and `L` the last token of an entity.

> - **Training data**: The training examples.

> - **Text and label**: The current example.

> - **Doc**: A `Doc` object created from the example text.

> - **GoldParse**: A `GoldParse` object of the `Doc` and label.

> - **nlp**: The `nlp` object with the model.

> - **Optimizer**: A function that holds state between updates.

> - **Update**: Update the model's weights.

Of course, it's not enough to only show a model a single example once.

Especially if you only have few examples, you'll want to train for a **number of

iterations**. At each iteration, the training data is **shuffled** to ensure the

model doesn't make any generalizations based on the order of examples. Another

technique to improve the learning results is to set a **dropout rate**, a rate

at which to randomly "drop" individual features and representations. This makes

it harder for the model to memorize the training data. For example, a `0.25`

dropout means that each feature or internal representation has a 1/4 likelihood

of being dropped.

> - [`begin_training`](/api/language#begin_training): Start the training and

> return an optimizer function to update the model's weights. Can take an

> optional function converting the training data to spaCy's training format.

> - [`update`](/api/language#update): Update the model with the training example

> and gold data.

> - [`to_disk`](/api/language#to_disk): Save the updated model to a directory.

```python

### Example training loop

optimizer = nlp.begin_training(get_data)

for itn in range(100):

random.shuffle(train_data)

for raw_text, entity_offsets in train_data:

doc = nlp.make_doc(raw_text)

gold = GoldParse(doc, entities=entity_offsets)

nlp.update([doc], [gold], drop=0.5, sgd=optimizer)

nlp.to_disk("/model")

```

The [`nlp.update`](/api/language#update) method takes the following arguments:

| Name | Description |

| ------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| `docs` | [`Doc`](/api/doc) objects. The `update` method takes a sequence of them, so you can batch up your training examples. Alternatively, you can also pass in a sequence of raw texts. |

| `golds` | [`GoldParse`](/api/goldparse) objects. The `update` method takes a sequence of them, so you can batch up your training examples. Alternatively, you can also pass in a dictionary containing the annotations. |

| `drop` | Dropout rate. Makes it harder for the model to just memorize the data. |

| `sgd` | An optimizer, i.e. a callable to update the model's weights. If not set, spaCy will create a new one and save it for further use. |

Instead of writing your own training loop, you can also use the built-in

[`train`](/api/cli#train) command, which expects data in spaCy's

[JSON format](/api/annotation#json-input). On each epoch, a model will be saved

out to the directory.