
| title | teaser |
|---|---|
| What's New in v3.1 | New features and how to upgrade |

New Features

It's been great to see the adoption of the new spaCy v3, which introduced transformer-based pipelines, a new config and training system for reproducible experiments, projects for end-to-end workflows, and many other features. Version 3.1 adds more on top of it, including the ability to use predicted annotations during training, a new `SpanCategorizer` component for predicting arbitrary and potentially overlapping spans, support for partial incorrect annotations in the entity recognizer, new trained pipelines for Catalan and Danish, as well as many bug fixes and improvements.

Using predicted annotations during training

By default, components are updated in isolation during training, which means that they don't see the predictions of any earlier components in the pipeline. The new `[training.annotating_components]` config setting lets you specify pipeline components that should set annotations on the predicted docs during training. This makes it easy to use the predictions of a previous component in the pipeline as features for a subsequent component, e.g. the dependency labels in the tagger:

### config.cfg (excerpt)

```ini
[nlp]
pipeline = ["parser", "tagger"]

[components.tagger.model.tok2vec.embed]
@architectures = "spacy.MultiHashEmbed.v1"
width = ${components.tagger.model.tok2vec.encode.width}
attrs = ["NORM","DEP"]
rows = [5000,2500]
include_static_vectors = false

[training]
annotating_components = ["parser"]
```
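To make the ordering concrete, here is a toy sketch in plain Python. It does not use spaCy: `ToyDoc`, `ToyParser`, `ToyTagger` and `update_pipeline` are hypothetical names, and the loop only mirrors the idea described above, namely that a component listed in `annotating_components` sets its predictions on the docs during the update loop, so later components can read them as features.

```python
from typing import Dict, Iterable, List, Set

# Hypothetical stand-in for a Doc: attribute name -> one value per token
ToyDoc = Dict[str, List[str]]


class ToyParser:
    """Pretend parser: its 'prediction' is a DEP value for every token."""

    name = "parser"

    def update(self, docs: List[ToyDoc]) -> None:
        pass  # a real component would compute a loss and adjust weights here

    def annotate(self, docs: List[ToyDoc]) -> None:
        for doc in docs:
            doc["DEP"] = ["dep"] * len(doc["ORTH"])


class ToyTagger:
    """Pretend tagger that records which attributes were available as features."""

    name = "tagger"

    def __init__(self) -> None:
        self.seen_features: List[Set[str]] = []

    def update(self, docs: List[ToyDoc]) -> None:
        for doc in docs:
            self.seen_features.append(set(doc))

    def annotate(self, docs: List[ToyDoc]) -> None:
        for doc in docs:
            doc["TAG"] = ["X"] * len(doc["ORTH"])


def update_pipeline(components: Iterable, docs: List[ToyDoc], annotating_components: Set[str]) -> None:
    """Update components in order; annotating ones also write their predictions."""
    for component in components:
        component.update(docs)
        if component.name in annotating_components:
            component.annotate(docs)
```

With `{"parser"}` as the annotating set, the tagger sees `DEP` next to `ORTH`; with an empty set, it only sees `ORTH`, which corresponds to the default isolated training.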

This project shows how to use the `token.dep` attribute predicted by the parser as a feature for a subsequent tagger component in the pipeline.

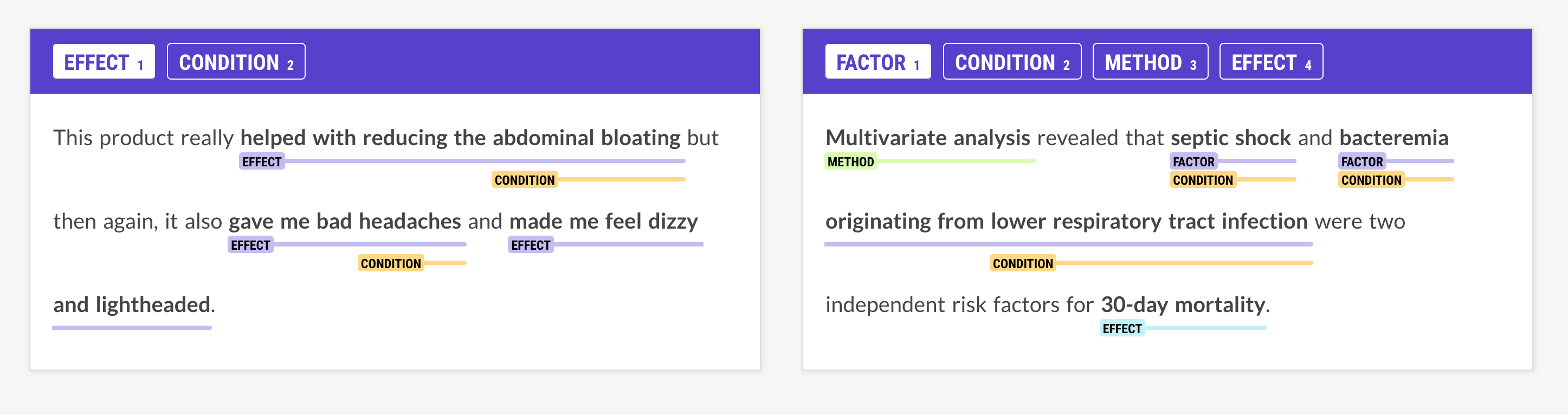

SpanCategorizer for predicting arbitrary and overlapping spans

A common task in applied NLP is extracting spans of text from documents, including longer phrases or nested expressions. Named entity recognition isn't the right tool for this problem, since an entity recognizer typically predicts single token-based tags that are very sensitive to boundaries. This is effective for proper nouns and self-contained expressions, but less useful for other types of phrases or overlapping spans. The new `SpanCategorizer` component and `SpanCategorizer` architecture let you label arbitrary and potentially overlapping spans of text. A span categorizer consists of two parts: a suggester function that proposes candidate spans, which may or may not overlap, and a labeler model that predicts zero or more labels for each candidate. The predicted spans are available via the `Doc.spans` container.
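The two-part design can be sketched in plain Python. This is not spaCy's implementation: `ngram_suggester` here is only similar in spirit to spaCy's registered `spacy.ngram_suggester.v1`, and the hypothetical `scorer` argument stands in for the trained labeler model. Because every candidate is scored independently, overlapping spans can both receive a label.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def ngram_suggester(tokens: Sequence[str], sizes: Sequence[int]) -> List[Tuple[int, int]]:
    """Propose (start, end) token offsets for every n-gram of the given sizes."""
    candidates = []
    for size in sizes:
        for start in range(len(tokens) - size + 1):
            candidates.append((start, start + size))
    return candidates


def categorize_spans(
    tokens: Sequence[str],
    sizes: Sequence[int],
    scorer: Callable[[Sequence[str]], Dict[str, float]],
    threshold: float = 0.5,
) -> List[Tuple[int, int, str]]:
    """Give each candidate zero or more labels whose score reaches the threshold."""
    results = []
    for start, end in ngram_suggester(tokens, sizes):
        for label, score in scorer(tokens[start:end]).items():
            if score >= threshold:
                results.append((start, end, label))
    return results
```

For example, a scorer that only fires on spans starting with "New York" labels both "New York" and "New York City", two overlapping spans that a token-based entity recognizer could not predict at the same time.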

This project trains a span categorizer for Indonesian NER.

The upcoming version of our annotation tool Prodigy (currently available as a pre-release for all users) features a new workflow and UI for annotating overlapping and nested spans. You can use it to create training data for spaCy's `SpanCategorizer` component.

Update the entity recognizer with partial incorrect annotations

config.cfg (excerpt)

```ini
[components.ner]
factory = "ner"
incorrect_spans_key = "incorrect_spans"
moves = null
update_with_oracle_cut_size = 100
```

The `EntityRecognizer` can now be updated with known incorrect annotations, which lets you take advantage of partial and sparse data. For example, you'll be able to use the information that certain spans of text are definitely not `PERSON` entities, without having to provide the complete gold-standard annotations for the given example. The incorrect span annotations can be added to `Doc.spans` in the training data under the key defined as `incorrect_spans_key` in the component config.

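```python
import spacy
from spacy.tokens import Span

nlp = spacy.blank("en")  # blank English pipeline, used here only for tokenization
train_doc = nlp.make_doc("Barack Obama was born in Hawaii.")
# The doc.spans key can be defined in the config
train_doc.spans["incorrect_spans"] = [
    Span(train_doc, 0, 2, label="ORG"),  # "Barack Obama" is not an ORG
    Span(train_doc, 5, 6, label="PRODUCT"),  # "Hawaii" is not a PRODUCT
]
```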

New pipeline packages for Catalan and Danish

spaCy v3.1 adds 5 new pipeline packages, including a new core family for Catalan and a new transformer-based pipeline for Danish using the `danish-bert-botxo` weights.

See the models directory for an overview of all available trained pipelines and the training guide for details on how to train your own.

Thanks to Carlos Rodríguez Penagos and the Barcelona Supercomputing Center for their contributions for Catalan and to Kenneth Enevoldsen for Danish. For additional Danish pipelines, check out DaCy.

| Package | Language | UPOS | Parser LAS | NER F |
|---|---|---|---|---|
| ca_core_news_sm | Catalan | 98.2 | 87.4 | 79.8 |
| ca_core_news_md | Catalan | 98.3 | 88.2 | 84.0 |
| ca_core_news_lg | Catalan | 98.5 | 88.4 | 84.2 |
| ca_core_news_trf | Catalan | 98.9 | 93.0 | 91.2 |
| da_core_news_trf | Danish | 98.0 | 85.0 | 82.9 |

Resizable text classification architectures

Previously, the `TextCategorizer` architectures could not be resized, meaning that you couldn't add new labels to an already trained model. In spaCy v3.1, the `TextCatCNN` and `TextCatBOW` architectures are now resizable, while ensuring that the predictions for the old labels remain the same.
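The general idea of resizing without disturbing existing labels can be illustrated with a single linear output layer. This plain-Python sketch is a simplification, not how `TextCatCNN` or `TextCatBOW` are actually resized: it copies the trained rows for existing labels and appends zero-initialized rows for new ones (the zero initialization is a hypothetical choice). Since each label's score depends only on its own row, the old scores stay identical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def resize_output_layer(weights: Matrix, bias: List[float], n_new: int) -> Tuple[Matrix, List[float]]:
    """Append n_new label rows; rows for existing labels are copied unchanged."""
    n_inputs = len(weights[0]) if weights else 0
    new_weights = [row[:] for row in weights] + [[0.0] * n_inputs for _ in range(n_new)]
    new_bias = bias[:] + [0.0] * n_new
    return new_weights, new_bias


def label_scores(weights: Matrix, bias: List[float], features: List[float]) -> List[float]:
    """One independent linear score per label."""
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, bias)]
```

This guarantee relies on the labels being scored independently. If all scores were normalized together, for example with a softmax over mutually exclusive classes, adding a label would shift the probabilities of the old ones.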

CLI command to assemble pipeline from config

The `spacy assemble` command lets you assemble a pipeline from a config file without additional training. It can be especially useful for creating a blank pipeline with a custom tokenizer, rule-based components or word vectors.

```bash
$ python -m spacy assemble config.cfg ./output
```

Pretty pipeline package READMEs

The `spacy package` command now auto-generates a pretty `README.md` based on the pipeline information defined in the `meta.json`. This includes a table with a general overview, as well as the label scheme and accuracy figures, if available. For an example, see the model releases.
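As a rough idea of what such a generator does, here is a hypothetical `render_overview` function that turns a few `meta.json`-style fields (`lang`, `name`, `version`, `spacy_version`, `components`) into a Markdown table. It is only an illustration: the README produced by `spacy package` has its own layout and includes more sections, such as the label scheme and accuracy figures.

```python
from typing import Any, Dict


def render_overview(meta: Dict[str, Any]) -> str:
    """Render a small Markdown overview table from meta.json-style fields."""
    rows = [
        ("Name", f"{meta['lang']}_{meta['name']}"),
        ("Version", meta["version"]),
        ("spaCy", meta["spacy_version"]),
        # Fall back to "n/a" when no components are listed
        ("Components", ", ".join(meta.get("components", [])) or "n/a"),
    ]
    lines = ["| Feature | Description |", "| --- | --- |"]
    lines += [f"| **{key}** | {value} |" for key, value in rows]
    return "\n".join(lines)
```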

Support for streaming large or infinite corpora

config.cfg (excerpt)

```ini
[training]
max_epochs = -1
```

The training process now supports streaming large or infinite corpora out-of-the-box, which can be controlled via the `[training.max_epochs]` config setting. Setting it to `-1` means that the train corpus is streamed rather than loaded into memory, and that it is not shuffled within the training loop. For details on how to implement a custom corpus loader, e.g. to stream in data from remote storage, see the usage guide on custom data reading.
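The key property of a streamed corpus is that records are produced lazily instead of being read into a list first. A minimal sketch, assuming a hypothetical JSONL format with one record per line; a real spaCy corpus reader would be registered with `spacy.registry.readers` and yield `Example` objects, which this stand-alone function leaves out.

```python
import json
from typing import IO, Any, Dict, Iterator


def stream_jsonl(file: IO[str]) -> Iterator[Dict[str, Any]]:
    """Yield one record per non-empty line; only the current line is held in memory."""
    for line in file:
        line = line.strip()
        if line:
            yield json.loads(line)
```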

When streaming a corpus, only the first 100 examples will be used for initialization. This is no problem if you're training a component like the text classifier with data that specifies all available labels in every example. If necessary, you can use the `init labels` command to pre-generate the labels for your components using a representative sample so the model can be initialized correctly before training.
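Why a small initialization sample can be a problem is easy to see with a toy stream. In this sketch the record format (a `labels` list per record) is hypothetical, and `labels_in_sample` only mimics looking at the first 100 examples: a label that first appears later in the stream is never seen, which is the situation that pre-generating labels with `init labels` avoids.

```python
import itertools
from typing import Any, Dict, Iterable, Set


def labels_in_sample(stream: Iterable[Dict[str, Any]], n: int = 100) -> Set[str]:
    """Collect labels from the first n records of a (possibly infinite) stream."""
    # islice stops after n records, so an infinite generator is safe here
    return {label for record in itertools.islice(stream, n) for label in record["labels"]}
```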

New lemmatizers for Catalan and Italian

The trained pipelines for Catalan and Italian now include lemmatizers that use the predicted part-of-speech tags as part of the lookup lemmatization for higher lemmatization accuracy. If you're training your own pipelines for these languages and you want to include a lemmatizer, make sure you have the `spacy-lookups-data` package installed, which provides the relevant tables.
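The benefit of a POS-aware lookup is that the same surface form can map to different lemmas depending on its predicted tag. For example, Italian *era* is a form of *essere* ("to be") when used as an auxiliary or verb, while the noun *era* ("era") is its own lemma. The tables and the `pos_lookup_lemma` function below are hypothetical and far smaller than the tables shipped in `spacy-lookups-data`.

```python
from typing import Dict

# Hypothetical, tiny per-POS lookup tables (Italian examples)
POS_TABLES: Dict[str, Dict[str, str]] = {
    "AUX": {"era": "essere"},
    "VERB": {"era": "essere"},
    "NOUN": {"ere": "era"},
}


def pos_lookup_lemma(word: str, pos: str, tables: Dict[str, Dict[str, str]] = POS_TABLES) -> str:
    """Look the lowercased word up in the table for its predicted POS.

    Falls back to the word itself if the POS or the word is not in the tables.
    """
    return tables.get(pos, {}).get(word.lower(), word)
```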

Upload your pipelines to the Hugging Face Hub

The Hugging Face Hub lets you upload models and share them with others, and it now supports spaCy pipelines out-of-the-box. The new `spacy-huggingface-hub` package automatically adds the `huggingface-hub` command to your `spacy` CLI. It lets you upload any pipeline packaged with `spacy package` and `--build wheel`, and it takes care of auto-generating all required meta information.

After uploading, you'll get a live URL for your model page that includes all details, files and interactive visualizers, as well as a direct URL to the wheel file that you can install via `pip install`. For examples, check out the spaCy pipelines we've uploaded.

```bash
$ pip install spacy-huggingface-hub
$ huggingface-cli login
$ python -m spacy package ./en_ner_fashion ./output --build wheel
$ cd ./output/en_ner_fashion-0.0.0/dist
$ python -m spacy huggingface-hub push en_ner_fashion-0.0.0-py3-none-any.whl
```
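If you script these steps, for example in a project workflow, it can help to compute the wheel path from the package name and version instead of hard-coding it. The `wheel_path` helper below is hypothetical and assumes the `./output/<name>-<version>/dist/` layout and the `py3-none-any` wheel tag seen in the commands above.

```python
from pathlib import Path


def wheel_path(output_dir: str, package: str, version: str) -> Path:
    """Build the expected wheel location under the assumed output layout."""
    base = f"{package}-{version}"
    return Path(output_dir) / base / "dist" / f"{base}-py3-none-any.whl"
```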

You can also integrate the upload command into your project template to automatically upload your packaged pipelines after training.

Get started with uploading your models to the Hugging Face Hub using our project template. It trains a simple pipeline, packages it and uploads it if the packaged model has changed. This makes it easy to deploy your models end-to-end.

Notes about upgrading from v3.0

Pipeline package version compatibility

Using legacy implementations

In spaCy v3, you'll still be able to load and reference legacy implementations via `spacy-legacy`, even if the components or architectures change and newer versions are available in the core library.

When you're loading a pipeline package trained with spaCy v3.0, you will see a warning telling you that the pipeline may be incompatible. This doesn't necessarily have to be true, but we recommend running your pipelines against your test suite or evaluation data to make sure there are no unexpected results. If you're using one of the trained pipelines we provide, you should run `spacy download` to update to the latest version. To see an overview of all installed packages and their compatibility, you can run `spacy validate`.

If you've trained your own custom pipeline and you've confirmed that it's still working as expected, you can update the spaCy version requirements in the `meta.json`:

```diff
- "spacy_version": ">=3.0.0,<3.1.0",
+ "spacy_version": ">=3.0.0,<3.2.0",
```
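To see why the old requirement flags a v3.0 package under v3.1, here is a deliberately minimal range check. The `satisfies` helper is hypothetical and only understands `>=` and `<` clauses of plain `X.Y.Z` versions; real tooling would use something like `packaging.specifiers.SpecifierSet`, which handles the full specifier syntax.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '3.1.0' into (3, 1, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def satisfies(version: str, spec: str) -> bool:
    """Check a version against comma-separated '>=' and '<' clauses only."""
    v = parse_version(version)
    for clause in spec.split(","):
        clause = clause.strip()
        if clause.startswith(">="):
            ok = v >= parse_version(clause[2:])
        elif clause.startswith("<") and not clause.startswith("<="):
            ok = v < parse_version(clause[1:])
        else:
            raise ValueError(f"unsupported clause: {clause}")
        if not ok:
            return False
    return True
```

Under this check, spaCy 3.1.0 fails `>=3.0.0,<3.1.0` but passes the updated `>=3.0.0,<3.2.0`.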

Updating v3.0 configs

To update a config from spaCy v3.0 with the new v3.1 settings, run `init fill-config`:

```bash
python -m spacy init fill-config config-v3.0.cfg config-v3.1.cfg
```

In many cases (`spacy train`, `spacy.load()`), the new defaults will be filled in automatically, but you'll need to fill in the new settings to run `debug config` and `debug data`.

Sourcing pipeline components with vectors

If you're sourcing a pipeline component that requires static vectors (for example, a tagger or parser from an `md` or `lg` pretrained pipeline), be sure to include the source model's vectors in the setting `[initialize.vectors]`. In spaCy v3.0, a bug allowed vectors to be loaded implicitly through `source`; however, in v3.1 this setting must be provided explicitly as `[initialize.vectors]`:

### config.cfg (excerpt)

```ini
[components.ner]
source = "en_core_web_md"

[initialize]
vectors = "en_core_web_md"
```

Each pipeline can only store one set of static vectors, so it's not possible to assemble a pipeline with components that were trained on different static vectors.

`spacy train` and `spacy assemble` will provide warnings if the source and target pipelines don't contain the same vectors. If you are sourcing a rule-based component like an entity ruler or lemmatizer that does not use the vectors as a model feature, then this warning can be safely ignored.

Warnings

Logger warnings have been converted to Python warnings. Use `warnings.filterwarnings` or the new helper method `spacy.errors.filter_warning(action, error_msg='')` to manage warnings.
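Because these are regular Python warnings now, the standard library filters apply. A minimal sketch, assuming spaCy's convention of starting warning messages with a bracketed code such as `[W095]`; the helper name `ignore_warning_code` and the example code are illustrative only, and `spacy.errors.filter_warning` may be the more convenient option.

```python
import re
import warnings


def ignore_warning_code(code: str) -> None:
    """Ignore warnings whose message starts with a bracketed code like '[W095]'."""
    # `message` is a regular expression matched against the start of the warning text
    warnings.filterwarnings("ignore", message=re.escape(f"[{code}]"))
```

Call it once at startup, before loading your pipelines, so that matching warnings are suppressed while all others are still shown.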