
| title | teaser | next |
|---|---|---|
| Training Pipelines & Models | Train and update components on your own data and integrate custom models | /usage/layers-architectures |

Introduction to training


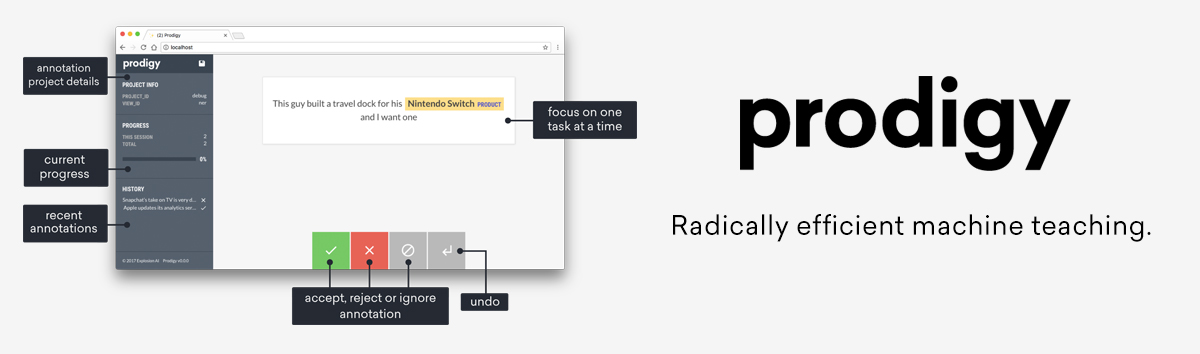

If you need to label a lot of data, check out Prodigy, a new, active learning-powered annotation tool we've developed. Prodigy is fast and extensible, and comes with a modern web application that helps you collect training data faster. It integrates seamlessly with spaCy, pre-selects the most relevant examples for annotation, and lets you train and evaluate ready-to-use spaCy pipelines.

Quickstart

The recommended way to train your spaCy pipelines is via the

spacy train command on the command line. It only needs a

single config.cfg configuration file that includes all settings

and hyperparameters. You can optionally overwrite settings

on the command line, and load in a Python file to register

custom functions and architectures. This quickstart widget helps

you generate a starter config with the recommended settings for your

specific use case. It's also available in spaCy as the

init config command.

Upgrade to the latest version of spaCy to use the quickstart widget. For earlier releases, follow the CLI instructions to generate a compatible config.

Instructions: widget

- Select your requirements and settings.
- Use the buttons at the bottom to save the result to your clipboard or a file base_config.cfg.
- Run init fill-config to create a full config.
- Run train with your config and data.

Instructions: CLI

- Run the init config command and specify your requirements and settings as CLI arguments.
- Run train with the exported config and data.


After you've saved the starter config to a file base_config.cfg, you can use

the init fill-config command to fill in the

remaining defaults. Training configs should always be complete and without

hidden defaults, to keep your experiments reproducible.

$ python -m spacy init fill-config base_config.cfg config.cfg

Tip: Debug your data

The debug data command lets you analyze and validate your training and development data, get useful stats, and find problems like invalid entity annotations, cyclic dependencies, low data labels and more.

$ python -m spacy debug data config.cfg

Instead of exporting your starter config from the quickstart widget and

auto-filling it, you can also use the init config

command and specify your requirements and settings as CLI arguments. You can now

add your data and run train with your config. See the

convert command for details on how to convert your data to

spaCy's binary .spacy format. You can either include the data paths in the

[paths] section of your config, or pass them in via the command line.

$ python -m spacy train config.cfg --output ./output --paths.train ./train.spacy --paths.dev ./dev.spacy

Tip: Enable your GPU

Use the --gpu-id option to select the GPU:

$ python -m spacy train config.cfg --gpu-id 0

The recommended config settings generated by the quickstart widget and the

init config command are based on some general best

practices and things we've found to work well in our experiments. The goal is

to provide you with the most useful defaults.

Under the hood, the

quickstart_training.jinja

template defines the different combinations – for example, which parameters to

change if the pipeline should optimize for efficiency vs. accuracy. The file

quickstart_training_recommendations.yml

collects the recommended settings and available resources for each language

including the different transformer weights. For some languages, we include

different transformer recommendations, depending on whether you want the model

to be more efficient or more accurate. The recommendations will be evolving

as we run more experiments.

The easiest way to get started is to clone a project template and run it – for example, this end-to-end template that lets you train a part-of-speech tagger and dependency parser on a Universal Dependencies treebank.

Training config system

Training config files include all settings and hyperparameters for training

your pipeline. Instead of providing lots of arguments on the command line, you

only need to pass your config.cfg file to spacy train.

Under the hood, the training config uses the

configuration system provided by our

machine learning library Thinc. This also makes it easy to

integrate custom models and architectures, written in your framework of choice.

Some of the main advantages and features of spaCy's training config are:

- Structured sections. The config is grouped into sections, and nested sections are defined using the . notation. For example, [components.ner] defines the settings for the pipeline's named entity recognizer. The config can be loaded as a Python dict.

- References to registered functions. Sections can refer to registered functions like model architectures, optimizers or schedules and define arguments that are passed into them. You can also register your own functions to define custom architectures or methods, reference them in your config and tweak their parameters.

- Interpolation. If you have hyperparameters or other settings used by multiple components, define them once and reference them as variables.

- Reproducibility with no hidden defaults. The config file is the "single source of truth" and includes all settings.

- Automated checks and validation. When you load a config, spaCy checks if the settings are complete and if all values have the correct types. This lets you catch potential mistakes early. In your custom architectures, you can use Python type hints to tell the config which types of data to expect.

See spacy/default_config.cfg in the spaCy GitHub repository for the default config.

Under the hood, the config is parsed into a dictionary. It's divided into

sections and subsections, indicated by the square brackets and dot notation. For

example, [training] is a section and [training.batch_size] a subsection.

Subsections can define values, just like a dictionary, or use the @ syntax to

refer to registered functions. This allows the config to

not just define static settings, but also construct objects like architectures,

schedules, optimizers or any other custom components. The main top-level

sections of a config file are:

| Section | Description |
|---|---|
| nlp | Definition of the nlp object, its tokenizer and processing pipeline component names. |
| components | Definitions of the pipeline components and their models. |
| paths | Paths to data and other assets. Re-used across the config as variables, e.g. ${paths.train}, and can be overwritten on the CLI. |
| system | Settings related to system and hardware. Re-used across the config as variables, e.g. ${system.seed}, and can be overwritten on the CLI. |
| training | Settings and controls for the training and evaluation process. |
| pretraining | Optional settings and controls for the language model pretraining. |
| initialize | Data resources and arguments passed to components when nlp.initialize is called before training (but not at runtime). |
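To make the "parsed into a dictionary" idea concrete, here is a minimal sketch that uses only Python's standard library. It is not how spaCy or Thinc parse configs. The helper name parse_config and the sample text are assumptions made for illustration, and every value is assumed to be a JSON-like literal.

```python
import configparser
import json
from typing import Any, Dict

CONFIG_TEXT = """
[training]
seed = 0

[training.batch_size]
@schedules = "compounding.v1"
start = 100
stop = 1000
compound = 1.001
"""


def parse_config(text: str) -> Dict[str, Any]:
    """Parse INI-style text into a nested dict, splitting section names on dots."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep keys case-sensitive (e.g. "L2")
    parser.read_string(text)
    config: Dict[str, Any] = {}
    for section in parser.sections():
        node = config
        # "[training.batch_size]" becomes config["training"]["batch_size"]
        for part in section.split("."):
            node = node.setdefault(part, {})
        for key, value in parser.items(section):
            node[key] = json.loads(value)  # assumption: values are JSON-like
    return config


config = parse_config(CONFIG_TEXT)
print(config["training"]["batch_size"]["@schedules"])  # compounding.v1
```

The key starting with @ is kept as ordinary data at this stage. Turning it into a function call is a separate resolution step.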

For a full overview of spaCy's config format and settings, see the data format documentation and Thinc's config system docs. The settings available for the different architectures are documented with the model architectures API. See the Thinc documentation for optimizers and schedules.

Config lifecycle at runtime and training

A pipeline's config.cfg is considered the "single source of truth", both at

training and runtime. Under the hood,

Language.from_config takes care of constructing

the nlp object using the settings defined in the config. An nlp object's

config is available as nlp.config and it includes all

information about the pipeline, as well as the settings used to train and

initialize it.

At runtime spaCy will only use the [nlp] and [components] blocks of the

config and load all data, including tokenization rules, model weights and other

resources from the pipeline directory. The [training] block contains the

settings for training the model and is only used during training. Similarly, the

[initialize] block defines how the initial nlp object should be set up

before training and whether it should be initialized with vectors or pretrained

tok2vec weights, or any other data needed by the components.

The initialization settings are only loaded and used when

nlp.initialize is called (typically right before

training). This allows you to set up your pipeline using local data resources

and custom functions, and preserve the information in your config – but without

requiring it to be available at runtime. You can also use this mechanism to

provide data paths to custom pipeline components and custom tokenizers – see the

section on custom initialization for details.

Overwriting config settings on the command line

The config system means that you can define all settings in one place and in

a consistent format. There are no command-line arguments that need to be set,

and no hidden defaults. However, there can still be scenarios where you may want

to override config settings when you run spacy train. This

includes file paths to vectors or other resources that shouldn't be

hard-coded in a config file, or system-dependent settings.

For cases like this, you can set additional command-line options starting with

-- that correspond to the config section and value to override. For example,

--paths.train ./corpus/train.spacy sets the train value in the [paths]

block.

$ python -m spacy train config.cfg --paths.train ./corpus/train.spacy --paths.dev ./corpus/dev.spacy --training.batch_size 128

Only existing sections and values in the config can be overwritten. At the end

of the training, the final filled config.cfg is exported with your pipeline,

so you'll always have a record of the settings that were used, including your

overrides. Overrides are added before variables are

resolved, by the way – so if you need to use a value in multiple places,

reference it across your config and override it on the CLI once.
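The rule that only existing sections and values can be overwritten can be sketched in plain Python. This is an illustration, not spaCy's implementation: apply_overrides is a hypothetical helper, and the config is assumed to have already been parsed into nested dictionaries.

```python
from typing import Any, Dict


def apply_overrides(config: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Apply dot-notation overrides in place, rejecting keys that don't exist yet."""
    for dotted_key, value in overrides.items():
        *section_path, name = dotted_key.split(".")
        node = config
        for part in section_path:
            if not isinstance(node.get(part), dict):
                raise KeyError(f"Unknown config section in override: {dotted_key}")
            node = node[part]
        if name not in node:
            raise KeyError(f"Unknown config value in override: {dotted_key}")
        node[name] = value
    return config


config = {
    "paths": {"train": None, "dev": None},
    "training": {"batch_size": 1000},
}
# Equivalent in spirit to: --paths.train ./corpus/train.spacy --training.batch_size 128
apply_overrides(
    config,
    {"paths.train": "./corpus/train.spacy", "training.batch_size": 128},
)
print(config["training"]["batch_size"])  # 128
```

Rejecting unknown keys means a typo in an override name fails loudly and cannot silently add an unused setting.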

💡 Tip: Verbose logging

If you're using config overrides, you can set the --verbose flag on spacy train to make spaCy log more info, including which overrides were set via the CLI and environment variables.

Adding overrides via environment variables

Instead of defining the overrides as CLI arguments, you can also use the

SPACY_CONFIG_OVERRIDES environment variable using the same argument syntax.

This is especially useful if you're training models as part of an automated

process. Environment variables take precedence over CLI overrides and values

defined in the config file.

$ SPACY_CONFIG_OVERRIDES="--system.gpu_allocator pytorch --training.batch_size 128" ./your_script.sh

Reading from standard input

Setting the config path to - on the command line lets you read the config from

standard input and pipe it forward from a different process, like

init config or your own custom script. This is

especially useful for quick experiments, as it lets you generate a config on the

fly without having to save to and load from disk.

💡 Tip: Writing to stdout

When you run init config, you can set the output path to - to write to stdout. In a custom script, you can print the string config, e.g. print(nlp.config.to_str()).

$ python -m spacy init config - --lang en --pipeline ner,textcat --optimize accuracy | python -m spacy train - --paths.train ./corpus/train.spacy --paths.dev ./corpus/dev.spacy

Using variable interpolation

Another very useful feature of the config system is that it supports variable

interpolation for both values and sections. This means that you only need to

define a setting once and can reference it across your config using the

${section.value} syntax. In this example, the value of seed is reused within

the [training] block, and the whole block of [training.optimizer] is reused

in [pretraining] and will become pretraining.optimizer.

### config.cfg (excerpt)

[system]

seed = 0

[training]

seed = ${system.seed}

[training.optimizer]

@optimizers = "Adam.v1"

beta1 = 0.9

beta2 = 0.999

L2_is_weight_decay = true

L2 = 0.01

grad_clip = 1.0

use_averages = false

eps = 1e-8

[pretraining]

optimizer = ${training.optimizer}

You can also use variables inside strings. In that case, it works just like f-strings in Python. If the value of a variable is not a string, it's converted to a string.

[paths]

version = 5

root = "/Users/you/data"

train = "${paths.root}/train_${paths.version}.spacy"

# Result: /Users/you/data/train_5.spacy

If you need to change certain values between training runs, you can define them

once, reference them as variables and then override them on

the CLI. For example, --paths.root /other/root will change the value of root

in the block [paths] and the change will be reflected across all other values

that reference this variable.
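The following sketch shows one way the ${section.value} substitution could work on a config that is already a nested dictionary. It is a simplified stand-in written for this guide, not the config system's actual resolver. The names lookup and resolve are hypothetical, and the sketch does no cycle detection.

```python
import re
from typing import Any, Dict

VARIABLE = re.compile(r"\$\{([\w.]+)\}")


def lookup(config: Dict[str, Any], dotted_key: str) -> Any:
    """Follow a dotted path such as "paths.root" through nested dicts."""
    node: Any = config
    for part in dotted_key.split("."):
        node = node[part]
    return node


def resolve(value: Any, config: Dict[str, Any]) -> Any:
    """Recursively replace ${section.value} references in a config value."""
    if isinstance(value, dict):
        return {key: resolve(item, config) for key, item in value.items()}
    if not isinstance(value, str):
        return value
    whole = VARIABLE.fullmatch(value)
    if whole:
        # The value is a single variable: reuse the referenced value or block as-is.
        return resolve(lookup(config, whole.group(1)), config)

    def substitute(match: "re.Match[str]") -> str:
        # The variable sits inside a longer string: insert it as text.
        return str(resolve(lookup(config, match.group(1)), config))

    return VARIABLE.sub(substitute, value)


config = {
    "paths": {
        "version": 5,
        "root": "/Users/you/data",
        "train": "${paths.root}/train_${paths.version}.spacy",
    },
    "training": {"optimizer": {"@optimizers": "Adam.v1", "L2": 0.01}},
    "pretraining": {"optimizer": "${training.optimizer}"},
}
# Override first, then resolve, so the new root reaches every reference.
config["paths"]["root"] = "/other/root"
resolved = resolve(config, config)
print(resolved["paths"]["train"])  # /other/root/train_5.spacy
```

Because the override is applied before resolution, changing root once is enough to update every path that references it.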

Preparing Training Data

Training data for NLP projects comes in many different formats. For some common formats such as CoNLL, spaCy provides converters you can use from the command line. In other cases you'll have to prepare the training data yourself.

When converting training data for use in spaCy, the main thing is to create

Doc objects just like the results you want as output from the

pipeline. For example, if you're creating an NER pipeline, loading your

annotations and setting them as the .ents property on a Doc is all you need

to worry about. On disk the annotations will be saved as a

DocBin in the

.spacy format, but the details of that

are handled automatically.

Here's an example of creating a .spacy file from some NER annotations.

### preprocess.py

import spacy
from spacy.tokens import DocBin

nlp = spacy.blank("en")
training_data = [
    ("Tokyo Tower is 333m tall.", [(0, 11, "BUILDING")]),
]
# the DocBin will store the example documents
db = DocBin()
for text, annotations in training_data:
    doc = nlp(text)
    ents = []
    for start, end, label in annotations:
        # char_span returns None if the offsets don't align with token boundaries
        span = doc.char_span(start, end, label=label)
        if span is None:
            print(f"Skipping misaligned entity: {text[start:end]!r}")
            continue
        ents.append(span)
    doc.ents = ents
    db.add(doc)
db.to_disk("./train.spacy")

For more examples of how to convert training data from a wide variety of formats for use with spaCy, look at the preprocessing steps in the tutorial projects.

In spaCy v2, the recommended way to store training data was in

a particular JSON format, but in v3 this format

is deprecated. It's fine as a readable storage format, but there's no need to

convert your data to JSON before creating a .spacy file.

Customizing the pipeline and training

Defining pipeline components

You typically train a pipeline of one or more

components. The [components] block in the config defines the available

pipeline components and how they should be created – either by a built-in or

custom factory, or

sourced from an existing

trained pipeline. For example, [components.parser] defines the component named

"parser" in the pipeline. There are different ways you might want to treat

your components during training, and the most common scenarios are:

- Train a new component from scratch on your data.

- Update an existing trained component with more examples.

- Include an existing trained component without updating it.

- Include a non-trainable component, like a rule-based SpanRuler or Sentencizer, or a fully custom component.

If a component block defines a factory, spaCy will look it up in the

built-in or

custom components and create a

new component from scratch. All settings defined in the config block will be

passed to the component factory as arguments. This lets you configure the model

settings and hyperparameters. If a component block defines a source, the

component will be copied over from an existing trained pipeline, with its

existing weights. This lets you include an already trained component in your

pipeline, or update a trained component with more data specific to your use

case.

### config.cfg (excerpt)

[components]

# "parser" and "ner" are sourced from a trained pipeline

[components.parser]

source = "en_core_web_sm"

[components.ner]

source = "en_core_web_sm"

# "textcat" and "custom" are created blank from a built-in / custom factory

[components.textcat]

factory = "textcat"

[components.custom]

factory = "your_custom_factory"

your_custom_setting = true

The pipeline setting in the [nlp] block defines the pipeline components

added to the pipeline, in order. For example, "parser" here references

[components.parser]. By default, spaCy will update all components that can

be updated. Trainable components that are created from scratch are initialized

with random weights. For sourced components, spaCy will keep the existing

weights and resume training.

If you don't want a component to be updated, you can freeze it by adding it

to the frozen_components list in the [training] block. Frozen components are

not updated during training and are included in the final trained pipeline

as-is. They are also excluded when calling

nlp.initialize.

Note on frozen components

Even though frozen components are not updated during training, they will still run during evaluation. This is very important, because they may still impact your model's performance – for instance, a sentence boundary detector can impact what the parser or entity recognizer considers a valid parse. So the evaluation results should always reflect what your pipeline will produce at runtime. If you want a frozen component to run (without updating) during training as well, so that downstream components can use its predictions, you should add it to the list of

annotating_components.

[nlp]

lang = "en"

pipeline = ["parser", "ner", "textcat", "custom"]

[training]

frozen_components = ["parser", "custom"]

When the components in your pipeline

share an embedding layer, the

performance of your frozen component will be degraded if you continue

training other layers with the same underlying Tok2Vec instance. As a rule of

thumb, ensure that your frozen components are truly independent in the

pipeline.

To automatically replace a shared token-to-vector listener with an independent

copy of the token-to-vector layer, you can use the replace_listeners setting

of a sourced component, pointing to the listener layer(s) in the config. For

more details on how this works under the hood, see

Language.replace_listeners.

[training]

frozen_components = ["tagger"]

[components.tagger]

source = "en_core_web_sm"

replace_listeners = ["model.tok2vec"]

Using predictions from preceding components

By default, components are updated in isolation during training, which means

that they don't see the predictions of any earlier components in the pipeline. A

component receives Example.predicted as input and compares its

predictions to Example.reference without saving its

annotations in the predicted doc.

Instead, if certain components should set their annotations during training,

use the setting annotating_components in the [training] block to specify a

list of components. For example, the feature DEP from the parser could be used

as a tagger feature by including DEP in the tok2vec attrs and including

parser in annotating_components:

### config.cfg (excerpt)

[nlp]

pipeline = ["parser", "tagger"]

[components.tagger.model.tok2vec.embed]

@architectures = "spacy.MultiHashEmbed.v1"

width = ${components.tagger.model.tok2vec.encode.width}

attrs = ["NORM","DEP"]

rows = [5000,2500]

include_static_vectors = false

[training]

annotating_components = ["parser"]

Any component in the pipeline can be included as an annotating component,

including frozen components. Frozen components can set annotations during

training just as they would set annotations during evaluation or when the final

pipeline is run. The config excerpt below shows how a frozen ner component and

a sentencizer can provide the required doc.sents and doc.ents for the

entity linker during training:

### config.cfg (excerpt)

[nlp]

pipeline = ["sentencizer", "ner", "entity_linker"]

[components.ner]

source = "en_core_web_sm"

[training]

frozen_components = ["ner"]

annotating_components = ["sentencizer", "ner"]

Similarly, a pretrained tok2vec layer can be frozen and specified in the list

of annotating_components to ensure that a downstream component can use the

embedding layer without updating it.

Be aware that non-frozen annotating components with statistical models will run twice on each batch, once to update the model and once to apply the now-updated model to the predicted docs.

Using registered functions

The training configuration defined in the config file doesn't have to only

consist of static values. Some settings can also be functions. For instance,

the batch_size can be a number that doesn't change, or a schedule, like a

sequence of compounding values, which has been shown to be an effective trick (see

Smith et al., 2017).

### With static value

[training]

batch_size = 128

To refer to a function instead, you can make [training.batch_size] its own

section and use the @ syntax to specify the function and its arguments – in

this case compounding.v1

defined in the function registry. All other values

defined in the block are passed to the function as keyword arguments when it's

initialized. You can also use this mechanism to register

custom implementations and architectures and reference them

from your configs.

How the config is resolved

The config file is parsed into a regular dictionary and is resolved and validated bottom-up. Arguments provided for registered functions are checked against the function's signature and type annotations. The return value of a registered function can also be passed into another function – for instance, a learning rate schedule can be provided as an argument of an optimizer.

### With registered function

[training.batch_size]

@schedules = "compounding.v1"

start = 100

stop = 1000

compound = 1.001
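To show what a compounding schedule produces, here is a small pure-Python generator. It is a simplified stand-in for the behavior described above, not Thinc's implementation. The function below only borrows the name and the start, stop and compound arguments from the config block.

```python
from itertools import islice
from typing import Iterator


def compounding(start: float, stop: float, compound: float) -> Iterator[float]:
    """Yield start, start * compound, start * compound**2, ... capped at stop."""
    lower, upper = min(start, stop), max(start, stop)
    current = float(start)
    while True:
        yield max(lower, min(current, upper))
        current *= compound


# Keyword arguments mirror the keys of the [training.batch_size] block.
batch_sizes = compounding(start=100, stop=1000, compound=1.001)
first_three = list(islice(batch_sizes, 3))
print(first_three)
```

With these settings the batch size starts at 100, grows by 0.1% per step and never exceeds 1000.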

Model architectures

💡 Model type annotations

In the documentation and code base, you may come across type annotations and descriptions of Thinc model types, like Model[List[Doc], List[Floats2d]]. This so-called generic type describes the layer and its input and output type – in this case, it takes a list of Doc objects as the input and a list of 2-dimensional arrays of floats as the output. You can read more about defining Thinc models in the Thinc documentation. Also see the type checking documentation for how to enable linting in your editor to see live feedback if your inputs and outputs don't match.

A model architecture is a function that wires up a Thinc

Model instance, which you can then use in a

component or as a layer of a larger network. You can use Thinc as a thin

wrapper around frameworks such as

PyTorch, TensorFlow or MXNet, or you can implement your logic in Thinc

directly. For more details and examples,

see the usage guide on layers and architectures.

spaCy's built-in components will never construct their Model instances

themselves, so you won't have to subclass the component to change its model

architecture. You can just update the config so that it refers to a

different registered function. Once the component has been created, its Model

instance has already been assigned, so you cannot change its model architecture.

The architecture is like a recipe for the network, and you can't change the

recipe once the dish has already been prepared. You have to make a new one.

spaCy includes a variety of built-in architectures for

different tasks. For example:

| Architecture | Description |

|---|---|

| HashEmbedCNN | Build spaCy’s "standard" embedding layer, which uses hash embedding with subword features and a CNN with layer-normalized maxout. |

| TransitionBasedParser | Build a transition-based parser model used in the default EntityRecognizer and DependencyParser. |

| TextCatEnsemble | Stacked ensemble of a bag-of-words model and a neural network model with an internal CNN embedding layer. Used in the default TextCategorizer. |

Metrics, training output and weighted scores

When you train a pipeline using the spacy train command,

you'll see a table showing the metrics after each pass over the data. The

available metrics depend on the pipeline components. Pipeline components

also define which scores are shown and how they should be weighted in the

final score that determines the best model.

The training.score_weights setting in your config.cfg lets you customize the

scores shown in the table and how they should be weighted. In this example, the

labeled dependency accuracy and NER F-score count towards the final score with

40% each and the tagging accuracy makes up the remaining 20%. The tokenization

accuracy and speed are both shown in the table, but not counted towards the

score.

Why do I need score weights?

At the end of your training process, you typically want to select the best model – but what "best" means depends on the available components and your specific use case. For instance, you may prefer a pipeline with higher NER and lower POS tagging accuracy over a pipeline with lower NER and higher POS accuracy. You can express this preference in the score weights, e.g. by assigning ents_f (NER F-score) a higher weight.

[training.score_weights]

dep_las = 0.4

dep_uas = null

ents_f = 0.4

tag_acc = 0.2

token_acc = 0.0

speed = 0.0

The score_weights don't have to sum to 1.0 – but it's recommended. When

you generate a config for a given pipeline, the score weights are generated by

combining and normalizing the default score weights of the pipeline components.

The default score weights are defined by each pipeline component via the

default_score_weights setting on the

@Language.factory decorator. By default, all pipeline

components are weighted equally. If a score weight is set to null, it will be

excluded from the logs and the score won't be weighted.
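As a rough illustration of how weights turn several metrics into one final score, the sketch below computes a weighted sum and skips metrics whose weight is null. The function weighted_score and the evaluation numbers are hypothetical, and spaCy's own scoring code may differ in detail.

```python
from typing import Dict, Optional


def weighted_score(scores: Dict[str, float], weights: Dict[str, Optional[float]]) -> float:
    """Combine per-metric scores into one number using the configured weights."""
    total = 0.0
    for name, weight in weights.items():
        if weight is None:  # null in the config: the metric is excluded
            continue
        total += weight * scores.get(name, 0.0)
    return total


# Mirrors the [training.score_weights] block shown earlier.
score_weights = {
    "dep_las": 0.4,
    "dep_uas": None,
    "ents_f": 0.4,
    "tag_acc": 0.2,
    "token_acc": 0.0,
    "speed": 0.0,
}
# Hypothetical evaluation results for one checkpoint.
scores = {
    "dep_las": 0.90,
    "dep_uas": 0.92,
    "ents_f": 0.80,
    "tag_acc": 0.95,
    "token_acc": 1.0,
    "speed": 20000.0,
}
print(round(weighted_score(scores, score_weights), 2))  # 0.87
```

A weight of 0.0 keeps a metric visible without letting it influence model selection, which is why speed does not dominate the result here.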

| Name | Description |

|---|---|

| Loss | The training loss representing the amount of work left for the optimizer. Should decrease, but usually not to 0. |

| Precision (P) | Percentage of predicted annotations that were correct. Should increase. |

| Recall (R) | Percentage of reference annotations recovered. Should increase. |

| F-Score (F) | Harmonic mean of precision and recall. Should increase. |

| UAS / LAS | Unlabeled and labeled attachment score for the dependency parser, i.e. the percentage of correct arcs. Should increase. |

| Speed | Prediction speed in words per second (WPS). Should stay stable. |

Note that if the development data has raw text, some of the gold-standard entities might not align to the predicted tokenization. These tokenization errors are excluded from the NER evaluation. If your tokenization makes it impossible for the model to predict 50% of your entities, your NER F-score might still look good.
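A small worked example makes this effect visible. The counts below are invented for illustration: 10 gold entities, 5 of which cannot be aligned to the predicted tokens, and a model that gets the remaining 5 right. The helper precision_recall_f is a hypothetical function that applies the standard definitions from the table above.

```python
from typing import Tuple


def precision_recall_f(true_pos: int, false_pos: int, false_neg: int) -> Tuple[float, float, float]:
    """Return precision, recall and their harmonic mean (F-score)."""
    predicted = true_pos + false_pos
    reference = true_pos + false_neg
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / reference if reference else 0.0
    total = precision + recall
    f_score = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_score


# Misaligned entities excluded from evaluation: only 5 gold entities remain.
excluded = precision_recall_f(true_pos=5, false_pos=0, false_neg=0)
# Same predictions, but the 5 unreachable entities counted as misses.
counted = precision_recall_f(true_pos=5, false_pos=0, false_neg=5)
print(excluded)
print(counted)
```

In the first case the F-score is perfect even though half of the entities could never be predicted. In the second case recall drops to 0.5 and the F-score to about 0.67.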

Custom functions

Registered functions in the training config files can refer to built-in

implementations, but you can also plug in fully custom implementations. All

you need to do is register your function using the @spacy.registry decorator

with the name of the respective registry, e.g.

@spacy.registry.architectures, and a string name to assign to your function.

Registering custom functions allows you to plug in models defined in PyTorch

or TensorFlow, make custom modifications to the nlp object, create custom

optimizers or schedules, or stream in data and preprocess it on the fly

while training.

Each custom function can have any number of arguments that are passed in via the

config, just like the built-in functions. If your function defines

default argument values, spaCy is able to auto-fill your config when you run

init fill-config. If you want to make sure that a

given parameter is always explicitly set in the config, avoid setting a default

value for it.

Training with custom code

### Training
$ python -m spacy train config.cfg --code functions.py

### Packaging
$ python -m spacy package ./model-best ./packages --code functions.py

The spacy train recipe lets you specify an optional argument

--code that points to a Python file. The file is imported before training and

allows you to add custom functions and architectures to the function registry

that can then be referenced from your config.cfg. This lets you train spaCy

pipelines with custom components, without having to re-implement the whole

training workflow. When you package your trained pipeline later using

spacy package, you can provide one or more Python files to

be included in the package and imported in its __init__.py. This means that

any custom architectures, functions or

components will be shipped with

your pipeline and registered when it's loaded. See the documentation on

saving and loading pipelines for details.

Example: Modifying the nlp object

For many use cases, you don't necessarily want to implement the whole Language

subclass and language data from scratch – it's often enough to make a few small

modifications, like adjusting the

tokenization rules or

language defaults like stop words. The config lets you

provide five optional callback functions that give you access to the

language class and nlp object at different points of the lifecycle:

| Callback | Description |
|---|---|
| nlp.before_creation | Called before the nlp object is created and receives the language subclass like English (not the instance). Useful for writing to the Language.Defaults aside from the tokenizer settings. |
| nlp.after_creation | Called right after the nlp object is created, but before the pipeline components are added to the pipeline and receives the nlp object. |
| nlp.after_pipeline_creation | Called right after the pipeline components are created and added and receives the nlp object. Useful for modifying pipeline components. |
| initialize.before_init | Called before the pipeline components are initialized and receives the nlp object for in-place modification. Useful for modifying the tokenizer settings, similar to the v2 base model option. |
| initialize.after_init | Called after the pipeline components are initialized and receives the nlp object for in-place modification. |

The @spacy.registry.callbacks decorator lets you register your custom function

in the callbacks registry under a given name. You

can then reference the function in a config block using the @callbacks key. If

a block contains a key starting with an @, it's interpreted as a reference to

a function. Because you've registered the function, spaCy knows how to create it

when you reference "customize_language_data" in your config. Here's an example

of a callback that runs before the nlp object is created and adds a custom

stop word to the defaults:

### config.cfg
[nlp.before_creation]
@callbacks = "customize_language_data"

### functions.py
import spacy

@spacy.registry.callbacks("customize_language_data")
def create_callback():
    def customize_language_data(lang_cls):
        lang_cls.Defaults.stop_words.add("good")
        return lang_cls

    return customize_language_data

Remember that a registered function should always be a function that spaCy calls to create something. In this case, it creates a callback – it's not the callback itself.

Any registered function – in this case create_callback – can also take

arguments that can be set by the config. This lets you implement and

keep track of different configurations, without having to hack at your code. You

can choose any arguments that make sense for your use case. In this example,

we're adding the arguments extra_stop_words (a list of strings) and debug

(boolean) for printing additional info when the function runs.

config.cfg

[nlp.before_creation]
@callbacks = "customize_language_data"
extra_stop_words = ["ooh", "aah"]
debug = true

### functions.py {highlight="5,7-9"}

from typing import List
import spacy

@spacy.registry.callbacks("customize_language_data")
def create_callback(extra_stop_words: List[str] = [], debug: bool = False):
    def customize_language_data(lang_cls):
        lang_cls.Defaults.stop_words.update(extra_stop_words)
        if debug:
            print("Updated stop words")
        return lang_cls

    return customize_language_data
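To make the mechanics concrete, here is a toy, dependency-free sketch of the same pattern. The `CALLBACKS` dict, the `register` decorator and the `resolve` helper are hypothetical stand-ins invented for this illustration. They are not how spaCy or Thinc implement the registry, and the callback edits a plain set rather than a language class. What carries over is the division of labor: the registered function receives the config settings and returns the callback.

```python
from typing import Any, Callable, Dict, List, Set

# Toy stand-in for a function registry: maps a string name to a factory.
CALLBACKS: Dict[str, Callable[..., Callable]] = {}


def register(name: str) -> Callable:
    def decorator(factory: Callable) -> Callable:
        CALLBACKS[name] = factory
        return factory

    return decorator


@register("customize_stop_words")
def create_callback(extra_stop_words: List[str], debug: bool = False) -> Callable[[Set[str]], Set[str]]:
    # The factory receives the config settings and returns the actual callback.
    def customize(stop_words: Set[str]) -> Set[str]:
        stop_words.update(extra_stop_words)
        if debug:
            print(f"Added {len(extra_stop_words)} stop words")
        return stop_words

    return customize


def resolve(block: Dict[str, Any]) -> Callable:
    # The "@callbacks" key names the factory; all other keys become keyword arguments.
    settings = dict(block)
    factory = CALLBACKS[settings.pop("@callbacks")]
    return factory(**settings)


block = {"@callbacks": "customize_stop_words", "extra_stop_words": ["ooh", "aah"], "debug": False}
callback = resolve(block)
stop_words = callback({"the", "a"})
```

Changing `extra_stop_words` in the block changes the behavior of the returned callback without touching the Python code, which is the point of routing arguments through the config.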

spaCy's configs are powered by our machine learning library Thinc's

configuration system, which supports

type hints and even

advanced type annotations

using pydantic. If your registered

function provides type hints, the values that are passed in will be checked

against the expected types. For example, debug: bool in the example above will

ensure that the value received as the argument debug is a boolean. If the

value can't be coerced into a boolean, spaCy will raise an error.

debug: pydantic.StrictBool will force the value to be a boolean and raise an

error if it's not – for instance, if your config defines 1 instead of true.

With your functions.py defining additional code and the updated config.cfg,

you can now run spacy train and point the argument --code

to your Python file. Before loading the config, spaCy will import the

functions.py module and your custom functions will be registered.

$ python -m spacy train config.cfg --output ./output --code ./functions.py

Example: Modifying tokenizer settings

Use the initialize.before_init callback to modify the tokenizer settings when

training a new pipeline. Write a registered callback that modifies the tokenizer

settings and specify this callback in your config:

config.cfg

[initialize]

[initialize.before_init]
@callbacks = "customize_tokenizer"

### functions.py

from spacy.util import registry, compile_suffix_regex

@registry.callbacks("customize_tokenizer")
def make_customize_tokenizer():
    def customize_tokenizer(nlp):
        # remove a suffix
        suffixes = list(nlp.Defaults.suffixes)
        suffixes.remove("\\[")
        suffix_regex = compile_suffix_regex(suffixes)
        nlp.tokenizer.suffix_search = suffix_regex.search

        # add a special case
        nlp.tokenizer.add_special_case("_SPECIAL_", [{"ORTH": "_SPECIAL_"}])

    return customize_tokenizer

When training, provide the function above with the --code option:

$ python -m spacy train config.cfg --code ./functions.py

Because this callback is only called in the one-time initialization step before training, the callback code does not need to be packaged with the final pipeline package. However, to make it easier for others to replicate your training setup, you can choose to package the initialization callbacks with the pipeline package or to publish them separately.

- nlp.before_creation is the best place to modify language defaults other than the tokenizer settings.
- initialize.before_init is the best place to modify tokenizer settings when training a new pipeline.

Unlike the other language defaults, the tokenizer settings are saved with the

pipeline with nlp.to_disk(), so modifications made in nlp.before_creation

will be clobbered by the saved settings when the trained pipeline is loaded from

disk.

Example: Custom logging function

During training, the results of each step are passed to a logger function. By

default, these results are written to the console with the

ConsoleLogger. There is also built-in support

for writing the log files to Weights & Biases with the

WandbLogger. On each

step, the logger function receives a dictionary with the following keys:

| Key | Value |
|---|---|
| epoch | How many passes over the data have been completed. |
| step | How many steps have been completed. |
| score | The main score from the last evaluation, measured on the dev set. |
| other_scores | The other scores from the last evaluation, measured on the dev set. |
| losses | The accumulated training losses, keyed by component name. |
| checkpoints | A list of previous results, where each result is a (score, step) tuple. |
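To make the shape of that dictionary concrete, the sketch below builds one by hand and turns it into a tab-separated row. All values are invented for illustration, the score names under `other_scores` are only examples, and `format_row` is a hypothetical helper, not part of spaCy.

```python
from typing import Any, Dict, List


def format_row(info: Dict[str, Any], pipe_names: List[str]) -> str:
    # One tab-separated row: step, score, then one loss per component.
    cells = [str(info["step"]), str(info["score"])]
    cells.extend(str(info["losses"][name]) for name in pipe_names)
    return "\t".join(cells)


# Invented values, shaped like the keys in the table above.
info = {
    "epoch": 1,
    "step": 200,
    "score": 0.82,
    "other_scores": {"ents_p": 0.85, "ents_r": 0.79},
    "losses": {"ner": 41.5},
    "checkpoints": [(0.75, 100), (0.82, 200)],
}
row = format_row(info, ["ner"])
```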

You can easily implement and plug in your own logger that records the training

results in a custom way, or sends them to an experiment management tracker of

your choice. In this example, the function my_custom_logger.v1 writes the

tabular results to a file:

### config.cfg (excerpt)

[training.logger]
@loggers = "my_custom_logger.v1"
log_path = "my_file.tab"

### functions.py

import sys
from typing import IO, Tuple, Callable, Dict, Any, Optional
import spacy
from spacy import Language
from pathlib import Path

@spacy.registry.loggers("my_custom_logger.v1")
def custom_logger(log_path):
    def setup_logger(
        nlp: Language,
        stdout: IO=sys.stdout,
        stderr: IO=sys.stderr
    ) -> Tuple[Callable, Callable]:
        stdout.write(f"Logging to {log_path}\n")
        log_file = Path(log_path).open("w", encoding="utf8")
        # Write the header row: step, score and one loss column per component
        log_file.write("step\t")
        log_file.write("score\t")
        for pipe in nlp.pipe_names:
            log_file.write(f"loss_{pipe}\t")
        log_file.write("\n")

        def log_step(info: Optional[Dict[str, Any]]):
            if info:
                log_file.write(f"{info['step']}\t")
                log_file.write(f"{info['score']}\t")
                for pipe in nlp.pipe_names:
                    log_file.write(f"{info['losses'][pipe]}\t")
                log_file.write("\n")

        def finalize():
            log_file.close()

        return log_step, finalize

    return setup_logger

Example: Custom batch size schedule

You can also implement your own batch size schedule to use during training. The

@spacy.registry.schedules decorator lets you register that function in the

schedules registry and assign it a string name:

Why the version in the name?

A big benefit of the config system is that it makes your experiments reproducible. We recommend versioning the functions you register, especially if you expect them to change (like a new model architecture). This way, you know that a config referencing v1 means a different function than a config referencing v2.

### functions.py

import spacy

@spacy.registry.schedules("my_custom_schedule.v1")
def my_custom_schedule(start: int = 1, factor: float = 1.001):
    while True:
        yield start
        start = start * factor

In your config, you can now reference the schedule in the

[training.batch_size] block via @schedules. If a block contains a key

starting with an @, it's interpreted as a reference to a function. All other

settings in the block will be passed to the function as keyword arguments. Keep

in mind that the config shouldn't have any hidden defaults and all arguments on

the functions need to be represented in the config.

### config.cfg (excerpt)

[training.batch_size]

@schedules = "my_custom_schedule.v1"

start = 2

factor = 1.005
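To see what such a schedule produces, it can help to pull the first few values out of the generator. The sketch below repeats the schedule logic without the registry decorator so that it runs without spaCy installed, and uses the `start` and `factor` values from the config excerpt.

```python
from itertools import islice
from typing import Iterator


def my_custom_schedule(start: float = 1, factor: float = 1.001) -> Iterator[float]:
    # Same logic as the registered function, minus the decorator.
    while True:
        yield start
        start = start * factor


# Take the first four batch sizes for start = 2, factor = 1.005.
first_values = list(islice(my_custom_schedule(start=2, factor=1.005), 4))
```

The first value is the integer `2`, but every later value is a float, because an int multiplied by a float factor is a float. If you need whole-number sizes, rounding inside the schedule is one option.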

Defining custom architectures

Built-in pipeline components such as the tagger or named entity recognizer are constructed with default neural network models. You can change the model architecture entirely by implementing your own custom models and providing those in the config when creating the pipeline component. See the documentation on layers and model architectures for more details.

### config.cfg

[components.tagger]
factory = "tagger"

[components.tagger.model]
@architectures = "custom_neural_network.v1"
output_width = 512

### functions.py

from typing import List
from thinc.types import Floats2d
from thinc.api import Model
import spacy
from spacy.tokens import Doc

@spacy.registry.architectures("custom_neural_network.v1")
def custom_neural_network(output_width: int) -> Model[List[Doc], List[Floats2d]]:
    # create_model is a placeholder for your own function that builds the model
    return create_model(output_width)

Customizing the initialization

When you start training a new model from scratch,

spacy train will call

nlp.initialize to initialize the pipeline and load

the required data. All settings for this are defined in the

[initialize] block of the config, so

you can keep track of how the initial nlp object was created. The

initialization process typically includes the following:

config.cfg (excerpt)

[initialize]
vectors = ${paths.vectors}
init_tok2vec = ${paths.init_tok2vec}

[initialize.components]
# Settings for components

- Load in data resources defined in the [initialize] config, including word vectors and pretrained tok2vec weights.
- Call the initialize methods of the tokenizer (if implemented, e.g. for Chinese) and pipeline components with a callback to access the training data, the current nlp object and any custom arguments defined in the [initialize] config.
- In pipeline components: if needed, use the data to infer missing shapes and set up the label scheme if no labels are provided. Components may also load other data like lookup tables or dictionaries.

The initialization step allows the config to define all settings required

for the pipeline, while keeping a separation between settings and functions that

should only be used before training to set up the initial pipeline, and

logic and configuration that needs to be available at runtime. Without that

separation, it would be very difficult to use the same, reproducible config file

because the component settings required for training (load data from an external

file) wouldn't match the component settings required at runtime (load what's

included with the saved nlp object and don't depend on an external file).

For details and examples of how pipeline components can save and load data assets like model weights or lookup tables, and how the component initialization is implemented under the hood, see the usage guide on serializing and initializing component data.

Initializing labels

Built-in pipeline components like the

EntityRecognizer or

DependencyParser need to know their available labels

and associated internal meta information to initialize their model weights.

Using the get_examples callback provided on initialization, they're able to

read the labels off the training data automatically, which is very

convenient – but it can also slow down the training process to compute this

information on every run.

The init labels command lets you auto-generate JSON

files containing the label data for all supported components. You can then pass

in the labels in the [initialize] settings for the respective components to

allow them to initialize faster.

config.cfg

[initialize.components.ner]

[initialize.components.ner.labels]
@readers = "spacy.read_labels.v1"
path = "corpus/labels/ner.json"

$ python -m spacy init labels config.cfg ./corpus --paths.train ./corpus/train.spacy

Under the hood, the command delegates to the label_data property of the

pipeline components, for instance

EntityRecognizer.label_data.

The JSON format differs for each component and some components need additional

meta information about their labels. The format exported by

init labels matches what the components need, so you

should always let spaCy auto-generate the labels for you.

Data utilities

spaCy includes various features and utilities to make it easy to train models using your own data, manage training and evaluation corpora, convert existing annotations and configure data augmentation strategies for more robust models.

Converting existing corpora and annotations

If you have training data in a standard format like .conll or .conllu, the

easiest way to convert it for use with spaCy is to run

spacy convert and pass it a file and an output directory.

By default, the command will pick the converter based on the file extension.

$ python -m spacy convert ./train.gold.conll ./corpus

💡 Tip: Converting from Prodigy

If you're using the Prodigy annotation tool to create training data, you can run the data-to-spacy command to merge and export multiple datasets for use with spacy train. Different types of annotations on the same text will be combined, giving you one corpus to train multiple components.

Training workflows often consist of multiple steps, from preprocessing the data all the way to packaging and deploying the trained model. spaCy projects let you define all steps in one file, manage data assets, track changes and share your end-to-end processes with your team.

The binary .spacy format is a serialized DocBin containing

one or more Doc objects. It's extremely efficient in storage,

especially when packing multiple documents together. You can also create Doc

objects manually, so you can write your own custom logic to convert and store

existing annotations for use in spaCy.

### Training data from Doc objects {highlight="6-9"}

import spacy

from spacy.tokens import Doc, DocBin

nlp = spacy.blank("en")

docbin = DocBin()

words = ["Apple", "is", "looking", "at", "buying", "U.K.", "startup", "."]

spaces = [True, True, True, True, True, True, True, False]

ents = ["B-ORG", "O", "O", "O", "O", "B-GPE", "O", "O"]

doc = Doc(nlp.vocab, words=words, spaces=spaces, ents=ents)

docbin.add(doc)

docbin.to_disk("./train.spacy")

Working with corpora

Example

[corpora]

[corpora.train]
@readers = "spacy.Corpus.v1"
path = ${paths.train}
gold_preproc = false
max_length = 0
limit = 0
augmenter = null

[training]
train_corpus = "corpora.train"

The [corpora] block in your config lets

you define data resources to use for training, evaluation, pretraining or

any other custom workflows. corpora.train and corpora.dev are used as

conventions within spaCy's default configs, but you can also define any other

custom blocks. Each section in the corpora config should resolve to a

Corpus – for example, using spaCy's built-in

corpus reader that takes a path to a binary

.spacy file. The train_corpus and dev_corpus fields in the

[training] block specify where to find

the corpus in your config. This makes it easy to swap out different corpora

by only changing a single config setting.

Instead of making [corpora] a block with multiple subsections for each portion

of the data, you can also use a single function that returns a dictionary of

corpora, keyed by corpus name, e.g. "train" and "dev". This can be

especially useful if you need to split a single file into corpora for training

and evaluation, without loading the same file twice.
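The single-function variant can be sketched as follows. This is a dependency-free illustration only: `create_split_corpora`, `dev_fraction` and `seed` are hypothetical names, and the readers yield plain strings where a real reader would yield Example objects built with the `nlp` object. In an actual setup, the function would presumably be registered in the readers registry and referenced from the [corpora] block.

```python
import random
from typing import Callable, Dict, Iterator, List


def create_split_corpora(items: List[str], dev_fraction: float = 0.2, seed: int = 0) -> Dict[str, Callable]:
    # Shuffle a copy once so both readers see a fixed, disjoint split.
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_dev = max(1, int(len(shuffled) * dev_fraction))
    dev_items, train_items = shuffled[:n_dev], shuffled[n_dev:]

    def make_reader(subset: List[str]) -> Callable:
        def read(nlp=None) -> Iterator[str]:
            # A real reader would create Example objects with nlp here.
            yield from subset

        return read

    # Keys match the corpus names used elsewhere in the config.
    return {"train": make_reader(train_items), "dev": make_reader(dev_items)}


corpora = create_split_corpora([f"text {i}" for i in range(10)])
train = list(corpora["train"]())
dev = list(corpora["dev"]())
```

Because the split happens once inside the outer function, the source is read a single time and the two readers never overlap.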

By default, the training data is loaded into memory and shuffled before each epoch. If the corpus is too large to fit into memory during training, stream the corpus using a custom reader as described in the next section.

Custom data reading and batching

Some use-cases require streaming in data or manipulating datasets on the

fly, rather than generating all data beforehand and storing it to disk. Instead

of using the built-in Corpus reader, which uses static file

paths, you can create and register a custom function that generates

Example objects.

In the following example we assume a custom function read_custom_data which

loads or generates texts with relevant text classification annotations. Then,

small lexical variations of the input text are created before generating the

final Example objects. The @spacy.registry.readers decorator

lets you register the function creating the custom reader in the readers

registry and assign it a string name, so it can be

used in your config. All arguments on the registered function become available

as config settings – in this case, source.

config.cfg

[corpora.train]
@readers = "corpus_variants.v1"
source = "s3://your_bucket/path/data.csv"

### functions.py {highlight="7-8"}

from typing import Callable, Iterator, List
import spacy
from spacy.training import Example
from spacy.language import Language
import random

@spacy.registry.readers("corpus_variants.v1")
def stream_data(source: str) -> Callable[[Language], Iterator[Example]]:
    def generate_stream(nlp):
        for text, cats in read_custom_data(source):
            # Create a random variant of the example text
            i = random.randint(0, len(text) - 1)
            variant = text[:i] + text[i].upper() + text[i + 1:]
            doc = nlp.make_doc(variant)
            example = Example.from_dict(doc, {"cats": cats})
            yield example

    return generate_stream

Remember that a registered function should always be a function that spaCy calls to create something. In this case, it creates the reader function – it's not the reader itself.

If the corpus is too large to load into memory or the corpus reader is an

infinite generator, use the setting max_epochs = -1 to indicate that the

train corpus should be streamed. With this setting the train corpus is merely

streamed and batched, not shuffled, so any shuffling needs to be implemented in

the corpus reader itself. In the example below, a corpus reader that generates

sentences containing even or odd numbers is used with an unlimited number of

examples for the train corpus and a limited number of examples for the dev

corpus. The dev corpus should always be finite and fit in memory during the

evaluation step. max_steps and/or patience are used to determine when the

training should stop.

config.cfg

[corpora.dev]
@readers = "even_odd.v1"
limit = 100

[corpora.train]
@readers = "even_odd.v1"
limit = -1

[training]
max_epochs = -1
patience = 500
max_steps = 2000

### functions.py

from typing import Callable, Iterable, Iterator
from spacy import util
import random
from spacy.training import Example
from spacy import Language

@util.registry.readers("even_odd.v1")
def create_even_odd_corpus(limit: int = -1) -> Callable[[Language], Iterable[Example]]:
    return EvenOddCorpus(limit)

class EvenOddCorpus:
    def __init__(self, limit):
        self.limit = limit

    def __call__(self, nlp: Language) -> Iterator[Example]:
        i = 0
        while i < self.limit or self.limit < 0:
            r = random.randint(0, 1000)
            cat = r % 2 == 0
            text = "This is sentence " + str(r)
            yield Example.from_dict(
                nlp.make_doc(text), {"cats": {"EVEN": cat, "ODD": not cat}}
            )
            i += 1
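With an endless training stream, max_steps and patience are the only things that end the run. The following is a simplified model of how two such criteria can interact, not spaCy's actual training loop: `steps_until_stop` is a hypothetical helper, the evaluation results are invented, and the sketch assumes that a value of 0 disables the respective criterion.

```python
from typing import Iterable, Tuple


def steps_until_stop(scores: Iterable[Tuple[int, float]], patience: int, max_steps: int) -> int:
    """Return the step at which training would stop in this simplified model."""
    best_score = float("-inf")
    best_step = 0
    step = 0
    for step, score in scores:
        if score > best_score:
            best_score, best_step = score, step
        # Stop when there has been no improvement for `patience` steps ...
        if patience > 0 and step - best_step >= patience:
            break
        # ... or when the step budget is used up.
        if max_steps > 0 and step >= max_steps:
            break
    return step


# Invented evaluation results as (step, score) pairs.
evaluations = [(200, 0.60), (400, 0.71), (600, 0.70), (800, 0.69), (1000, 0.71)]
stopped_at = steps_until_stop(evaluations, patience=500, max_steps=2000)
```

Here the best score is reached at step 400 and never beaten, so the patience criterion ends the run at step 1000, well before the step budget of 2000.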

config.cfg

[initialize.components.textcat.labels]
@readers = "spacy.read_labels.v1"
path = "labels/textcat.json"
require = true

If the train corpus is streamed, the initialize step peeks at the first 100

examples in the corpus to find the labels for each component. If this isn't

sufficient, you'll need to provide the labels for each

component in the [initialize] block. init labels can

be used to generate JSON files in the correct format, which you can extend with

the full label set.

We can also customize the batching strategy by registering a new batcher

function in the batchers registry. A batcher turns

a stream of items into a stream of batches. spaCy has several useful built-in

batching strategies with customizable sizes, but it's

also easy to implement your own. For instance, the following function takes the

stream of generated Example objects, and removes those which

have the same underlying raw text, to avoid duplicates within each batch. Note

that in a more realistic implementation, you'd also want to check whether the

annotations are the same.

config.cfg

[training.batcher]
@batchers = "filtering_batch.v1"
size = 150

### functions.py

from typing import Callable, Iterable, Iterator, List
import spacy
from spacy.training import Example

@spacy.registry.batchers("filtering_batch.v1")
def filter_batch(size: int) -> Callable[[Iterable[Example]], Iterator[List[Example]]]:
    def create_filtered_batches(examples):
        batch = []
        for eg in examples:
            # Remove duplicate examples with the same text from batch
            if eg.text not in [x.text for x in batch]:
                batch.append(eg)
            if len(batch) == size:
                yield batch
                batch = []
        # Yield the remaining examples so a final partial batch isn't dropped
        if batch:
            yield batch

    return create_filtered_batches
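The batching logic can be tried out in isolation. The sketch below is a standalone variant of the inner function that takes `size` directly and also emits leftover items as a final, smaller batch. The `SimpleNamespace` objects are stand-ins for Example objects, since only the `text` attribute is used.

```python
from types import SimpleNamespace
from typing import Iterable, Iterator, List


def create_filtered_batches(examples: Iterable, size: int) -> Iterator[List]:
    batch: List = []
    for eg in examples:
        # Skip items whose text is already in the current batch.
        if eg.text not in [x.text for x in batch]:
            batch.append(eg)
        if len(batch) == size:
            yield batch
            batch = []
    # Emit the leftover items as a final, smaller batch.
    if batch:
        yield batch


# Stand-ins for Example objects: only the .text attribute is used here.
stream = [SimpleNamespace(text=t) for t in ["a", "a", "b", "c", "c", "d", "e"]]
batches = [[eg.text for eg in batch] for batch in create_filtered_batches(stream, size=2)]
```

Note that duplicates are only filtered within a batch: the same text can still show up again in a later batch.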

Data augmentation

Data augmentation is the process of applying small modifications to the training data. It can be especially useful for punctuation and case replacement – for example, if your corpus only uses smart quotes and you want to include variations using regular quotes, or to make the model less sensitive to capitalization by including a mix of capitalized and lowercase examples.

The easiest way to use data augmentation during training is to provide an

augmenter to the training corpus, e.g. in the [corpora.train] section of

your config. The built-in orth_variants

augmenter creates a data augmentation callback that uses orth-variant

replacement.

### config.cfg (excerpt) {highlight="8,14"}

[corpora.train]

@readers = "spacy.Corpus.v1"

path = ${paths.train}

gold_preproc = false

max_length = 0

limit = 0

[corpora.train.augmenter]

@augmenters = "spacy.orth_variants.v1"

# Percentage of texts that will be augmented / lowercased

level = 0.1

lower = 0.5

[corpora.train.augmenter.orth_variants]

@readers = "srsly.read_json.v1"

path = "corpus/orth_variants.json"

The orth_variants argument lets you pass in a dictionary of replacement rules,

typically loaded from a JSON file. There are two types of orth variant rules:

"single" for single tokens that should be replaced (e.g. hyphens) and

"paired" for pairs of tokens (e.g. quotes).

### orth_variants.json

{

"single": [{ "tags": ["NFP"], "variants": ["…", "..."] }],

"paired": [{ "tags": ["``", "''"], "variants": [["'", "'"], ["‘", "’"]] }]

}
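As a rough illustration of what a "single" rule expresses, the sketch below swaps a token for one of its listed variants when its tag matches. It is a simplified stand-in, not spaCy's implementation: `replace_single_variants` is a hypothetical helper, tokens are plain (text, tag) pairs, and the `level`, `lower` and "paired" settings are ignored.

```python
import random
from typing import Dict, List, Tuple

ORTH_VARIANTS = {
    "single": [{"tags": ["NFP"], "variants": ["…", "..."]}],
}


def replace_single_variants(tokens: List[Tuple[str, str]], rules: Dict, rng: random.Random) -> List[str]:
    """Take (text, tag) pairs and return the possibly replaced token texts."""
    output = []
    for text, tag in tokens:
        for rule in rules.get("single", []):
            # Only swap tokens that carry a matching tag and are a known variant.
            if tag in rule["tags"] and text in rule["variants"]:
                text = rng.choice(rule["variants"])
                break
        output.append(text)
    return output


tokens = [("Well", "UH"), ("...", "NFP"), ("okay", "UH")]
result = replace_single_variants(tokens, ORTH_VARIANTS, random.Random(0))
```

Only the middle token is eligible for replacement. The other two are passed through unchanged because their tag does not appear in any rule.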

https://github.com/explosion/spacy-lookups-data/blob/master/spacy_lookups_data/data/en_orth_variants.json

https://github.com/explosion/spacy-lookups-data/blob/master/spacy_lookups_data/data/de_orth_variants.json

When adding data augmentation, keep in mind that it typically only makes sense to apply it to the training corpus, not the development data.

Writing custom data augmenters

Using the @spacy.augmenters registry, you can also

register your own data augmentation callbacks. The callback should be a function

that takes the current nlp object and a training Example and

yields Example objects. Keep in mind that the augmenter should yield all

examples you want to use in your corpus, not only the augmented examples

(unless you want to augment all examples).

Here's an example of a custom augmentation callback that produces text variants

in "SpOnGeBoB cAsE". The

registered function takes one argument randomize that can be set via the

config and decides whether the uppercase/lowercase transformation is applied

randomly or not. The augmenter yields two Example objects: the original

example and the augmented example.

config.cfg

[corpora.train.augmenter]
@augmenters = "spongebob_augmenter.v1"
randomize = false

import spacy
import random

@spacy.registry.augmenters("spongebob_augmenter.v1")
def create_augmenter(randomize: bool = False):
    def augment(nlp, example):
        text = example.text
        if randomize:
            # Randomly uppercase/lowercase characters
            chars = [c.lower() if random.random() < 0.5 else c.upper() for c in text]
        else:
            # Uppercase followed by lowercase
            chars = [c.lower() if i % 2 else c.upper() for i, c in enumerate(text)]
        # Create augmented training example
        example_dict = example.to_dict()
        doc = nlp.make_doc("".join(chars))
        example_dict["token_annotation"]["ORTH"] = [t.text for t in doc]
        # Original example followed by augmented example
        yield example
        yield example.from_dict(doc, example_dict)

    return augment

An easy way to create modified Example objects is to use the

Example.from_dict method with a new reference

Doc created from the modified text. In this case, only the

capitalization changes, so only the ORTH values of the tokens will be

different between the original and augmented examples.
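The deterministic branch of the transformation is easy to check on its own. The sketch below isolates it in a hypothetical helper, `spongebob_case`, which is not part of spaCy.

```python
def spongebob_case(text: str) -> str:
    # Even character positions are uppercased, odd positions lowercased.
    return "".join(c.lower() if i % 2 else c.upper() for i, c in enumerate(text))


original = "hello world"
augmented = spongebob_case(original)  # "HeLlO WoRlD"
```

For plain ASCII input like this, the length and whitespace positions stay the same, so only the casing differs. Some non-ASCII characters change length when uppercased (for example, "ß" becomes "SS"), in which case character offsets would shift.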

Note that if your data augmentation strategy involves changing the tokenization

(for instance, removing or adding tokens) and your training examples include

token-based annotations like the dependency parse or entity labels, you'll need

to take care to adjust the Example object so its annotations match and remain

valid.

Internal training API

spaCy gives you full control over the training loop. However, for most use

cases, it's recommended to train your pipelines via the

spacy train command with a config.cfg to keep

track of your settings and hyperparameters, instead of writing your own training

scripts from scratch. Custom registered functions should

typically give you everything you need to train fully custom pipelines with

spacy train.

Training from a Python script

If you want to run the training from a Python script instead of using the

spacy train CLI command, you can call into the

train helper function directly. It takes the path

to the config file, an optional output directory and an optional dictionary of

config overrides.

from spacy.cli.train import train

train("./config.cfg", overrides={"paths.train": "./train.spacy", "paths.dev": "./dev.spacy"})

Internal training loop API

This section documents how the training loop and updates to the nlp object

work internally. You typically shouldn't have to implement this in Python unless

you're writing your own trainable components. To train a pipeline, use

spacy train or the train helper

function instead.

The Example object contains annotated training data, also

called the gold standard. It's initialized with a Doc object

that will hold the predictions, and another Doc object that holds the

gold-standard annotations. It also includes the alignment between those two

documents if they differ in tokenization. The Example class ensures that spaCy

can rely on one standardized format that's passed through the pipeline. For

instance, let's say we want to define gold-standard part-of-speech tags:

import numpy
from spacy.tokens import Doc
from spacy.training import Example
from spacy.vocab import Vocab

vocab = Vocab()
words = ["I", "like", "stuff"]
predicted = Doc(vocab, words=words)
# create the reference Doc with gold-standard TAG annotations
tags = ["NOUN", "VERB", "NOUN"]
tag_ids = [vocab.strings.add(tag) for tag in tags]
reference = Doc(vocab, words=words).from_array("TAG", numpy.array(tag_ids, dtype="uint64"))
example = Example(predicted, reference)

As this is quite verbose, there's an alternative way to create the reference

Doc with the gold-standard annotations. The function Example.from_dict takes

a dictionary with keyword arguments specifying the annotations, like tags or

entities. Using the resulting Example object and its gold-standard

annotations, the model can be updated to learn a sentence of three words with

their assigned part-of-speech tags.

words = ["I", "like", "stuff"]

tags = ["NOUN", "VERB", "NOUN"]

predicted = Doc(nlp.vocab, words=words)

example = Example.from_dict(predicted, {"tags": tags})

Here's another example that shows how to define gold-standard named entities.

The letters added before the labels refer to the tags of the

BILUO scheme – O is a token

outside an entity, U a single entity unit, B the beginning of an entity, I

a token inside an entity and L the last token of an entity.

doc = Doc(nlp.vocab, words=["Facebook", "released", "React", "in", "2014"])

example = Example.from_dict(doc, {"entities": ["U-ORG", "O", "U-TECHNOLOGY", "O", "U-DATE"]})
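To show how the five letters fit together, here is a small, dependency-free sketch that turns token-level spans into BILUO tags. `spans_to_biluo` is a hypothetical helper written for this illustration and assumes non-overlapping spans with an exclusive end index. For character offsets, spaCy provides its own helper, `offsets_to_biluo_tags` in `spacy.training`.

```python
from typing import List, Tuple


def spans_to_biluo(n_tokens: int, spans: List[Tuple[int, int, str]]) -> List[str]:
    """Convert (start, end, label) token spans (end exclusive) to BILUO tags."""
    tags = ["O"] * n_tokens
    for start, end, label in spans:
        if end - start == 1:
            # A single-token entity is a unit.
            tags[start] = f"U-{label}"
        else:
            tags[start] = f"B-{label}"
            for i in range(start + 1, end - 1):
                tags[i] = f"I-{label}"
            tags[end - 1] = f"L-{label}"
    return tags


# The five-token sentence from the example above: three single-token entities.
single = spans_to_biluo(5, [(0, 1, "ORG"), (2, 3, "TECHNOLOGY"), (4, 5, "DATE")])
# A three-token entity followed by one token outside any entity.
multi = spans_to_biluo(4, [(0, 3, "ORG")])
```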

As of v3.0, the Example object replaces the GoldParse class.

It can be constructed in a very similar way – from a Doc and a dictionary of

annotations. For more details, see the

migration guide.

- gold = GoldParse(doc, entities=entities)

+ example = Example.from_dict(doc, {"entities": entities})

Of course, it's not enough to only show a model a single example once.

Especially if you only have a few examples, you'll want to train for a number of

iterations. At each iteration, the training data is shuffled to ensure the

model doesn't make any generalizations based on the order of examples. Another

technique to improve the learning results is to set a dropout rate, a rate

at which to randomly "drop" individual features and representations. This makes

it harder for the model to memorize the training data. For example, a 0.25

dropout means that each feature or internal representation has a 1/4 likelihood

of being dropped.
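The effect of a 0.25 dropout rate can be simulated in a few lines. This is a generic sketch of the technique rather than the implementation used by spaCy's models: `apply_dropout` is a hypothetical helper, and rescaling the surviving values ("inverted dropout") is one common convention assumed here.

```python
import random
from typing import List


def apply_dropout(values: List[float], rate: float, rng: random.Random) -> List[float]:
    # Zero each value with probability `rate` and rescale the survivors so
    # the expected sum stays the same ("inverted dropout").
    keep = 1.0 - rate
    return [v / keep if rng.random() >= rate else 0.0 for v in values]


rng = random.Random(0)
features = [1.0] * 10_000
dropped = apply_dropout(features, rate=0.25, rng=rng)
fraction_zeroed = dropped.count(0.0) / len(dropped)
```

With this many values, `fraction_zeroed` ends up close to 0.25, and a different random subset is dropped on each call.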

- nlp: The nlp object with the pipeline components and their models.
- nlp.initialize: Initialize the pipeline and return an optimizer to update the component model weights.
- Optimizer: Function that holds state between updates.
- nlp.update: Update component models with examples.
- Example: object holding predictions and gold-standard annotations.
- nlp.to_disk: Save the updated pipeline to a directory.

### Example training loop

optimizer = nlp.initialize()
for itn in range(100):
    random.shuffle(train_data)
    for raw_text, entity_offsets in train_data:
        doc = nlp.make_doc(raw_text)
        example = Example.from_dict(doc, {"entities": entity_offsets})
        nlp.update([example], sgd=optimizer)
nlp.to_disk("/output")

The nlp.update method takes the following arguments:

| Name | Description |
|---|---|
| examples | Example objects. The update method takes a sequence of them, so you can batch up your training examples. |
| drop | Dropout rate. Makes it harder for the model to just memorize the data. |
| sgd | An Optimizer object, which updates the model's weights. If not set, spaCy will create a new one and save it for further use. |

As of v3.0, the Example object replaces the GoldParse class

and the "simple training style" of calling nlp.update with a text and a

dictionary of annotations. Updating your code to use the Example object should

be very straightforward: you can call

Example.from_dict with a Doc and the

dictionary of annotations:

text = "Facebook released React in 2014"

annotations = {"entities": ["U-ORG", "O", "U-TECHNOLOGY", "O", "U-DATE"]}

+ example = Example.from_dict(nlp.make_doc(text), annotations)

- nlp.update([text], [annotations])

+ nlp.update([example])