73 KiB

| title | teaser | menu | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Rule-based matching | Find phrases and tokens, and match entities |

|

Compared to using regular expressions on raw text, spaCy's rule-based matcher

engines and components not only let you find the words and phrases you're

looking for – they also give you access to the tokens within the document and

their relationships. This means you can easily access and analyze the

surrounding tokens, merge spans into single tokens or add entries to the named

entities in doc.ents.

For complex tasks, it's usually better to train a statistical entity recognition model. However, statistical models require training data, so for many situations, rule-based approaches are more practical. This is especially true at the start of a project: you can use a rule-based approach as part of a data collection process, to help you "bootstrap" a statistical model.

Training a model is useful if you have some examples and you want your system to be able to generalize based on those examples. It works especially well if there are clues in the local context. For instance, if you're trying to detect person or company names, your application may benefit from a statistical named entity recognition model.

Rule-based systems are a good choice if there's a more or less finite number of examples that you want to find in the data, or if there's a very clear, structured pattern you can express with token rules or regular expressions. For instance, country names, IP addresses or URLs are things you might be able to handle well with a purely rule-based approach.

You can also combine both approaches and improve a statistical model with rules to handle very specific cases and boost accuracy. For details, see the section on rule-based entity recognition.

The PhraseMatcher is useful if you already have a large terminology list or

gazetteer consisting of single or multi-token phrases that you want to find

exact instances of in your data. As of spaCy v2.1.0, you can also match on the

LOWER attribute for fast and case-insensitive matching.

The Matcher isn't as blazing fast as the PhraseMatcher, since it compares

across individual token attributes. However, it allows you to write very

abstract representations of the tokens you're looking for, using lexical

attributes, linguistic features predicted by the model, operators, set

membership and rich comparison. For example, you can find a noun, followed by a

verb with the lemma "love" or "like", followed by an optional determiner and

another token that's at least 10 characters long.

Token-based matching

spaCy features a rule-matching engine, the Matcher, that

operates over tokens, similar to regular expressions. The rules can refer to

token annotations (e.g. the token text or tag_, and flags (e.g. IS_PUNCT).

The rule matcher also lets you pass in a custom callback to act on matches – for

example, to merge entities and apply custom labels. You can also associate

patterns with entity IDs, to allow some basic entity linking or disambiguation.

To match large terminology lists, you can use the

PhraseMatcher, which accepts Doc objects as match

patterns.

Adding patterns

Let's say we want to enable spaCy to find a combination of three tokens:

- A token whose lowercase form matches "hello", e.g. "Hello" or "HELLO".

- A token whose

is_punctflag is set toTrue, i.e. any punctuation. - A token whose lowercase form matches "world", e.g. "World" or "WORLD".

[{"LOWER": "hello"}, {"IS_PUNCT": True}, {"LOWER": "world"}]

When writing patterns, keep in mind that each dictionary represents one token. If spaCy's tokenization doesn't match the tokens defined in a pattern, the pattern is not going to produce any results. When developing complex patterns, make sure to check examples against spaCy's tokenization:

doc = nlp("A complex-example,!")

print([token.text for token in doc])

First, we initialize the Matcher with a vocab. The matcher must always share

the same vocab with the documents it will operate on. We can now call

matcher.add() with an ID and a list of patterns.

### {executable="true"}

import spacy

from spacy.matcher import Matcher

nlp = spacy.load("en_core_web_sm")

matcher = Matcher(nlp.vocab)

# Add match ID "HelloWorld" with no callback and one pattern

pattern = [{"LOWER": "hello"}, {"IS_PUNCT": True}, {"LOWER": "world"}]

matcher.add("HelloWorld", [pattern])

doc = nlp("Hello, world! Hello world!")

matches = matcher(doc)

for match_id, start, end in matches:

string_id = nlp.vocab.strings[match_id] # Get string representation

span = doc[start:end] # The matched span

print(match_id, string_id, start, end, span.text)

The matcher returns a list of (match_id, start, end) tuples – in this case,

[('15578876784678163569', 0, 3)], which maps to the span doc[0:3] of our

original document. The match_id is the hash value of

the string ID "HelloWorld". To get the string value, you can look up the ID in

the StringStore.

for match_id, start, end in matches:

string_id = nlp.vocab.strings[match_id] # 'HelloWorld'

span = doc[start:end] # The matched span

Optionally, we could also choose to add more than one pattern, for example to also match sequences without punctuation between "hello" and "world":

patterns = [

[{"LOWER": "hello"}, {"IS_PUNCT": True}, {"LOWER": "world"}],

[{"LOWER": "hello"}, {"LOWER": "world"}]

]

matcher.add("HelloWorld", patterns)

By default, the matcher will only return the matches and not do anything

else, like merge entities or assign labels. This is all up to you and can be

defined individually for each pattern, by passing in a callback function as the

on_match argument on add(). This is useful, because it lets you write

entirely custom and pattern-specific logic. For example, you might want to

merge some patterns into one token, while adding entity labels for other

pattern types. You shouldn't have to create different matchers for each of those

processes.

Available token attributes

The available token pattern keys correspond to a number of

Token attributes. The supported attributes for

rule-based matching are:

| Attribute | Description |

|---|---|

ORTH |

The exact verbatim text of a token. |

TEXT 2.1 |

The exact verbatim text of a token. |

LOWER |

The lowercase form of the token text. |

LENGTH |

The length of the token text. |

IS_ALPHA, IS_ASCII, IS_DIGIT |

Token text consists of alphabetic characters, ASCII characters, digits. |

IS_LOWER, IS_UPPER, IS_TITLE |

Token text is in lowercase, uppercase, titlecase. |

IS_PUNCT, IS_SPACE, IS_STOP |

Token is punctuation, whitespace, stop word. |

IS_SENT_START |

Token is start of sentence. |

LIKE_NUM, LIKE_URL, LIKE_EMAIL |

Token text resembles a number, URL, email. |

POS, TAG, MORPH, DEP, LEMMA, SHAPE |

The token's simple and extended part-of-speech tag, morphological analysis, dependency label, lemma, shape. |

ENT_TYPE |

The token's entity label. |

_ 2.1 |

Properties in custom extension attributes. |

OP |

Operator or quantifier to determine how often to match a token pattern. |

No, it shouldn't. spaCy will normalize the names internally and

{"LOWER": "text"} and {"lower": "text"} will both produce the same result.

Using the uppercase version is mostly a convention to make it clear that the

attributes are "special" and don't exactly map to the token attributes like

Token.lower and Token.lower_.

spaCy can't provide access to all of the attributes because the Matcher loops

over the Cython data, not the Python objects. Inside the matcher, we're dealing

with a TokenC struct – we don't have an instance

of Token. This means that all of the attributes that refer to

computed properties can't be accessed.

The uppercase attribute names like LOWER or IS_PUNCT refer to symbols from

the spacy.attrs enum table. They're passed

into a function that essentially is a big case/switch statement, to figure out

which struct field to return. The same attribute identifiers are used in

Doc.to_array, and a few other places in the code where

you need to describe fields like this.

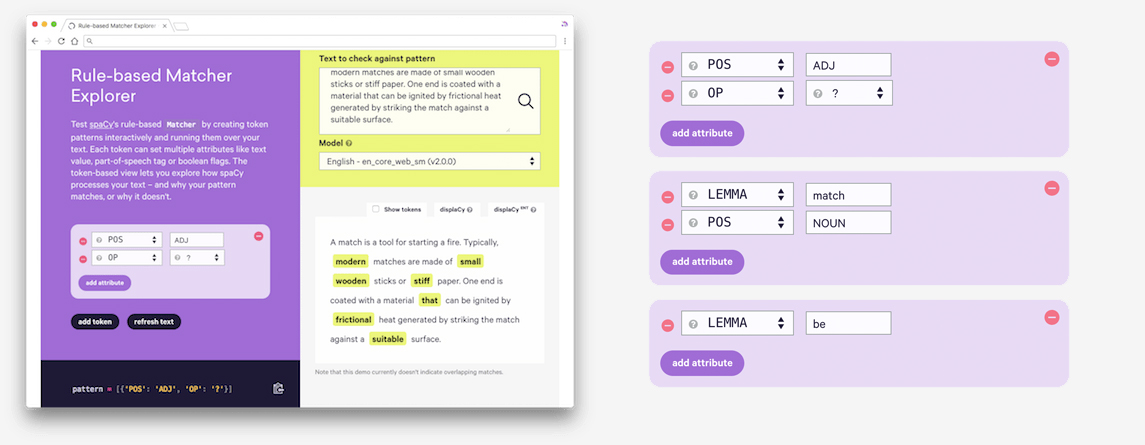

The Matcher Explorer lets you test the

rule-based Matcher by creating token patterns interactively and running them

over your text. Each token can set multiple attributes like text value,

part-of-speech tag or boolean flags. The token-based view lets you explore how

spaCy processes your text – and why your pattern matches, or why it doesn't.

Extended pattern syntax and attributes

Instead of mapping to a single value, token patterns can also map to a dictionary of properties. For example, to specify that the value of a lemma should be part of a list of values, or to set a minimum character length. The following rich comparison attributes are available:

Example

# Matches "love cats" or "likes flowers" pattern1 = [{"LEMMA": {"IN": ["like", "love"]}}, {"POS": "NOUN"}] # Matches tokens of length >= 10 pattern2 = [{"LENGTH": {">=": 10}}]

| Attribute | Description |

|---|---|

IN |

Attribute value is member of a list. |

NOT_IN |

Attribute value is not member of a list. |

ISSUBSET |

Attribute values (for MORPH) are a subset of a list. |

ISSUPERSET |

Attribute values (for MORPH) are a superset of a list. |

==, >=, <=, >, < |

Attribute value is equal, greater or equal, smaller or equal, greater or smaller. |

Regular expressions

In some cases, only matching tokens and token attributes isn't enough – for example, you might want to match different spellings of a word, without having to add a new pattern for each spelling.

pattern = [{"TEXT": {"REGEX": "^[Uu](\\.?|nited)$"}},

{"TEXT": {"REGEX": "^[Ss](\\.?|tates)$"}},

{"LOWER": "president"}]

The REGEX operator allows defining rules for any attribute string value,

including custom attributes. It always needs to be applied to an attribute like

TEXT, LOWER or TAG:

# Match different spellings of token texts

pattern = [{"TEXT": {"REGEX": "deff?in[ia]tely"}}]

# Match tokens with fine-grained POS tags starting with 'V'

pattern = [{"TAG": {"REGEX": "^V"}}]

# Match custom attribute values with regular expressions

pattern = [{"_": {"country": {"REGEX": "^[Uu](nited|\\.?) ?[Ss](tates|\\.?)$"}}}]

When using the REGEX operator, keep in mind that it operates on single

tokens, not the whole text. Each expression you provide will be matched on a

token. If you need to match on the whole text instead, see the details on

regex matching on the whole text.

Matching regular expressions on the full text

If your expressions apply to multiple tokens, a simple solution is to match on

the doc.text with re.finditer and use the

Doc.char_span method to create a Span from the

character indices of the match. If the matched characters don't map to one or

more valid tokens, Doc.char_span returns None.

What's a valid token sequence?

In the example, the expression will also match

"US"in"USA". However,"USA"is a single token andSpanobjects are sequences of tokens. So"US"cannot be its own span, because it does not end on a token boundary.

### {executable="true"}

import spacy

import re

nlp = spacy.load("en_core_web_sm")

doc = nlp("The United States of America (USA) are commonly known as the United States (U.S. or US) or America.")

expression = r"[Uu](nited|\\.?) ?[Ss](tates|\\.?)"

for match in re.finditer(expression, doc.text):

start, end = match.span()

span = doc.char_span(start, end)

# This is a Span object or None if match doesn't map to valid token sequence

if span is not None:

print("Found match:", span.text)

In some cases, you might want to expand the match to the closest token

boundaries, so you can create a Span for "USA", even though only the

substring "US" is matched. You can calculate this using the character offsets

of the tokens in the document, available as

Token.idx. This lets you create a list of valid token

start and end boundaries and leaves you with a rather basic algorithmic problem:

Given a number, find the next lowest (start token) or the next highest (end

token) number that's part of a given list of numbers. This will be the closest

valid token boundary.

There are many ways to do this and the most straightforward one is to create a

dict keyed by characters in the Doc, mapped to the token they're part of. It's

easy to write and less error-prone, and gives you a constant lookup time: you

only ever need to create the dict once per Doc.

chars_to_tokens = {}

for token in doc:

for i in range(token.idx, token.idx + len(token.text)):

chars_to_tokens[i] = token.i

You can then look up character at a given position, and get the index of the

corresponding token that the character is part of. Your span would then be

doc[token_start:token_end]. If a character isn't in the dict, it means it's

the (white)space tokens are split on. That hopefully shouldn't happen, though,

because it'd mean your regex is producing matches with leading or trailing

whitespace.

### {highlight="5-8"}

span = doc.char_span(start, end)

if span is not None:

print("Found match:", span.text)

else:

start_token = chars_to_tokens.get(start)

end_token = chars_to_tokens.get(end)

if start_token is not None and end_token is not None:

span = doc[start_token:end_token + 1]

print("Found closest match:", span.text)

Operators and quantifiers

The matcher also lets you use quantifiers, specified as the 'OP' key.

Quantifiers let you define sequences of tokens to be matched, e.g. one or more

punctuation marks, or specify optional tokens. Note that there are no nested or

scoped quantifiers – instead, you can build those behaviors with on_match

callbacks.

| OP | Description |

|---|---|

! |

Negate the pattern, by requiring it to match exactly 0 times. |

? |

Make the pattern optional, by allowing it to match 0 or 1 times. |

+ |

Require the pattern to match 1 or more times. |

* |

Allow the pattern to match zero or more times. |

Example

pattern = [{"LOWER": "hello"}, {"IS_PUNCT": True, "OP": "?"}]

In versions before v2.1.0, the semantics of the + and * operators behave

inconsistently. They were usually interpreted "greedily", i.e. longer matches

are returned where possible. However, if you specify two + and * patterns in

a row and their matches overlap, the first operator will behave non-greedily.

This quirk in the semantics is corrected in spaCy v2.1.0.

Using wildcard token patterns

While the token attributes offer many options to write highly specific patterns,

you can also use an empty dictionary, {} as a wildcard representing any

token. This is useful if you know the context of what you're trying to match,

but very little about the specific token and its characters. For example, let's

say you're trying to extract people's user names from your data. All you know is

that they are listed as "User name: {username}". The name itself may contain any

character, but no whitespace – so you'll know it will be handled as one token.

[{"ORTH": "User"}, {"ORTH": "name"}, {"ORTH": ":"}, {}]

Validating and debugging patterns

The Matcher can validate patterns against a JSON schema with the option

validate=True. This is useful for debugging patterns during development, in

particular for catching unsupported attributes.

### {executable="true"}

import spacy

from spacy.matcher import Matcher

nlp = spacy.load("en_core_web_sm")

matcher = Matcher(nlp.vocab, validate=True)

# Add match ID "HelloWorld" with unsupported attribute CASEINSENSITIVE

pattern = [{"LOWER": "hello"}, {"IS_PUNCT": True}, {"CASEINSENSITIVE": "world"}]

matcher.add("HelloWorld", [pattern])

# 🚨 Raises an error:

# MatchPatternError: Invalid token patterns for matcher rule 'HelloWorld'

# Pattern 0:

# - Additional properties are not allowed ('CASEINSENSITIVE' was unexpected) [2]

Adding on_match rules

To move on to a more realistic example, let's say you're working with a large

corpus of blog articles, and you want to match all mentions of "Google I/O"

(which spaCy tokenizes as ['Google', 'I', '/', 'O']). To be safe, you only

match on the uppercase versions, in case someone has written it as "Google i/o".

### {executable="true"}

from spacy.lang.en import English

from spacy.matcher import Matcher

from spacy.tokens import Span

nlp = English()

matcher = Matcher(nlp.vocab)

def add_event_ent(matcher, doc, i, matches):

# Get the current match and create tuple of entity label, start and end.

# Append entity to the doc's entity. (Don't overwrite doc.ents!)

match_id, start, end = matches[i]

entity = Span(doc, start, end, label="EVENT")

doc.ents += (entity,)

print(entity.text)

pattern = [{"ORTH": "Google"}, {"ORTH": "I"}, {"ORTH": "/"}, {"ORTH": "O"}]

matcher.add("GoogleIO", [pattern], on_match=add_event_ent)

doc = nlp("This is a text about Google I/O")

matches = matcher(doc)

A very similar logic has been implemented in the built-in

EntityRuler by the way. It also takes care of handling

overlapping matches, which you would otherwise have to take care of yourself.

Tip: Visualizing matches

When working with entities, you can use displaCy to quickly generate a NER visualization from your updated

Doc, which can be exported as an HTML file:from spacy import displacy html = displacy.render(doc, style="ent", page=True, options={"ents": ["EVENT"]})For more info and examples, see the usage guide on visualizing spaCy.

We can now call the matcher on our documents. The patterns will be matched in the order they occur in the text. The matcher will then iterate over the matches, look up the callback for the match ID that was matched, and invoke it.

doc = nlp(YOUR_TEXT_HERE)

matcher(doc)

When the callback is invoked, it is passed four arguments: the matcher itself, the document, the position of the current match, and the total list of matches. This allows you to write callbacks that consider the entire set of matched phrases, so that you can resolve overlaps and other conflicts in whatever way you prefer.

| Argument | Description |

|---|---|

matcher |

The matcher instance. |

doc |

The document the matcher was used on. |

i |

Index of the current match (matches[i]). |

matches |

A list of (match_id, start, end) tuples, describing the matches. A match tuple describes a span doc[start:end]. |

Creating spans from matches

Creating Span objects from the returned matches is a very common

use case. spaCy makes this easy by giving you access to the start and end

token of each match, which you can use to construct a new span with an optional

label. As of spaCy v3.0, you can also set as_spans=True when calling the

matcher on a Doc, which will return a list of Span objects

using the match_id as the span label.

### {executable="true"}

import spacy

from spacy.matcher import Matcher

from spacy.tokens import Span

nlp = spacy.blank("en")

matcher = Matcher(nlp.vocab)

matcher.add("PERSON", [[{"lower": "barack"}, {"lower": "obama"}]])

doc = nlp("Barack Obama was the 44th president of the United States")

# 1. Return (match_id, start, end) tuples

matches = matcher(doc)

for match_id, start, end in matches:

# Create the matched span and assign the match_id as a label

span = Span(doc, start, end, label=match_id)

print(span.text, span.label_)

# 2. Return Span objects directly

matches = matcher(doc, as_spans=True)

for span in matches:

print(span.text, span.label_)

Using custom pipeline components

Let's say your data also contains some annoying pre-processing artifacts, like

leftover HTML line breaks (e.g. <br> or <BR/>). To make your text easier to

analyze, you want to merge those into one token and flag them, to make sure you

can ignore them later. Ideally, this should all be done automatically as you

process the text. You can achieve this by adding a

custom pipeline component

that's called on each Doc object, merges the leftover HTML spans and sets an

attribute bad_html on the token.

### {executable="true"}

import spacy

from spacy.language import Language

from spacy.matcher import Matcher

from spacy.tokens import Token

# We're using a component factory because the component needs to be

# initialized with the shared vocab via the nlp object

@Language.factory("html_merger")

def create_bad_html_merger(nlp, name):

return BadHTMLMerger(nlp.vocab)

class BadHTMLMerger:

def __init__(self, vocab):

patterns = [

[{"ORTH": "<"}, {"LOWER": "br"}, {"ORTH": ">"}],

[{"ORTH": "<"}, {"LOWER": "br/"}, {"ORTH": ">"}],

]

# Register a new token extension to flag bad HTML

Token.set_extension("bad_html", default=False)

self.matcher = Matcher(vocab)

self.matcher.add("BAD_HTML", patterns)

def __call__(self, doc):

# This method is invoked when the component is called on a Doc

matches = self.matcher(doc)

spans = [] # Collect the matched spans here

for match_id, start, end in matches:

spans.append(doc[start:end])

with doc.retokenize() as retokenizer:

for span in spans:

retokenizer.merge(span)

for token in span:

token._.bad_html = True # Mark token as bad HTML

return doc

nlp = spacy.load("en_core_web_sm")

nlp.add_pipe("html_merger", last=True) # Add component to the pipeline

doc = nlp("Hello<br>world! <br/> This is a test.")

for token in doc:

print(token.text, token._.bad_html)

Instead of hard-coding the patterns into the component, you could also make it

take a path to a JSON file containing the patterns. This lets you reuse the

component with different patterns, depending on your application. When adding

the component to the pipeline with nlp.add_pipe, you

can pass in the argument via the config:

@Language.factory("html_merger", default_config={"path": None})

def create_bad_html_merger(nlp, name, path):

return BadHTMLMerger(nlp, path=path)

nlp.add_pipe("html_merger", config={"path": "/path/to/patterns.json"})

For more details and examples of how to create custom pipeline components and extension attributes, see the usage guide.

Example: Using linguistic annotations

Let's say you're analyzing user comments and you want to find out what people are saying about Facebook. You want to start off by finding adjectives following "Facebook is" or "Facebook was". This is obviously a very rudimentary solution, but it'll be fast, and a great way to get an idea for what's in your data. Your pattern could look like this:

[{"LOWER": "facebook"}, {"LEMMA": "be"}, {"POS": "ADV", "OP": "*"}, {"POS": "ADJ"}]

This translates to a token whose lowercase form matches "facebook" (like Facebook, facebook or FACEBOOK), followed by a token with the lemma "be" (for example, is, was, or 's), followed by an optional adverb, followed by an adjective. Using the linguistic annotations here is especially useful, because you can tell spaCy to match "Facebook's annoying", but not "Facebook's annoying ads". The optional adverb makes sure you won't miss adjectives with intensifiers, like "pretty awful" or "very nice".

To get a quick overview of the results, you could collect all sentences

containing a match and render them with the

displaCy visualizer. In the callback function, you'll have

access to the start and end of each match, as well as the parent Doc. This

lets you determine the sentence containing the match, doc[start:end].sent,

and calculate the start and end of the matched span within the sentence. Using

displaCy in "manual" mode lets you pass in a

list of dictionaries containing the text and entities to render.

### {executable="true"}

import spacy

from spacy import displacy

from spacy.matcher import Matcher

nlp = spacy.load("en_core_web_sm")

matcher = Matcher(nlp.vocab)

matched_sents = [] # Collect data of matched sentences to be visualized

def collect_sents(matcher, doc, i, matches):

match_id, start, end = matches[i]

span = doc[start:end] # Matched span

sent = span.sent # Sentence containing matched span

# Append mock entity for match in displaCy style to matched_sents

# get the match span by ofsetting the start and end of the span with the

# start and end of the sentence in the doc

match_ents = [{

"start": span.start_char - sent.start_char,

"end": span.end_char - sent.start_char,

"label": "MATCH",

}]

matched_sents.append({"text": sent.text, "ents": match_ents})

pattern = [{"LOWER": "facebook"}, {"LEMMA": "be"}, {"POS": "ADV", "OP": "*"},

{"POS": "ADJ"}]

matcher.add("FacebookIs", [pattern], on_match=collect_sents) # add pattern

doc = nlp("I'd say that Facebook is evil. – Facebook is pretty cool, right?")

matches = matcher(doc)

# Serve visualization of sentences containing match with displaCy

# set manual=True to make displaCy render straight from a dictionary

# (if you're not running the code within a Jupyer environment, you can

# use displacy.serve instead)

displacy.render(matched_sents, style="ent", manual=True)

Example: Phone numbers

Phone numbers can have many different formats and matching them is often tricky. During tokenization, spaCy will leave sequences of numbers intact and only split on whitespace and punctuation. This means that your match pattern will have to look out for number sequences of a certain length, surrounded by specific punctuation – depending on the national conventions.

The IS_DIGIT flag is not very helpful here, because it doesn't tell us

anything about the length. However, you can use the SHAPE flag, with each d

representing a digit (up to 4 digits / characters):

[{"ORTH": "("}, {"SHAPE": "ddd"}, {"ORTH": ")"}, {"SHAPE": "dddd"},

{"ORTH": "-", "OP": "?"}, {"SHAPE": "dddd"}]

This will match phone numbers of the format (123) 4567 8901 or (123)

4567-8901. To also match formats like (123) 456 789, you can add a second

pattern using 'ddd' in place of 'dddd'. By hard-coding some values, you can

match only certain, country-specific numbers. For example, here's a pattern to

match the most common formats of

international German numbers:

[{"ORTH": "+"}, {"ORTH": "49"}, {"ORTH": "(", "OP": "?"}, {"SHAPE": "dddd"},

{"ORTH": ")", "OP": "?"}, {"SHAPE": "dddd", "LENGTH": 6}]

Depending on the formats your application needs to match, creating an extensive set of rules like this is often better than training a model. It'll produce more predictable results, is much easier to modify and extend, and doesn't require any training data – only a set of test cases.

### {executable="true"}

import spacy

from spacy.matcher import Matcher

nlp = spacy.load("en_core_web_sm")

matcher = Matcher(nlp.vocab)

pattern = [{"ORTH": "("}, {"SHAPE": "ddd"}, {"ORTH": ")"}, {"SHAPE": "ddd"},

{"ORTH": "-", "OP": "?"}, {"SHAPE": "ddd"}]

matcher.add("PHONE_NUMBER", [pattern])

doc = nlp("Call me at (123) 456 789 or (123) 456 789!")

print([t.text for t in doc])

matches = matcher(doc)

for match_id, start, end in matches:

span = doc[start:end]

print(span.text)

Example: Hashtags and emoji on social media

Social media posts, especially tweets, can be difficult to work with. They're very short and often contain various emoji and hashtags. By only looking at the plain text, you'll lose a lot of valuable semantic information.

Let's say you've extracted a large sample of social media posts on a specific

topic, for example posts mentioning a brand name or product. As the first step

of your data exploration, you want to filter out posts containing certain emoji

and use them to assign a general sentiment score, based on whether the expressed

emotion is positive or negative, e.g. 😀 or 😞. You also want to find, merge and

label hashtags like #MondayMotivation, to be able to ignore or analyze them

later.

Note on sentiment analysis

Ultimately, sentiment analysis is not always that easy. In addition to the emoji, you'll also want to take specific words into account and check the

subtreefor intensifiers like "very", to increase the sentiment score. At some point, you might also want to train a sentiment model. However, the approach described in this example is very useful for bootstrapping rules to collect training data. It's also an incredibly fast way to gather first insights into your data – with about 1 million tweets, you'd be looking at a processing time of under 1 minute.

By default, spaCy's tokenizer will split emoji into separate tokens. This means

that you can create a pattern for one or more emoji tokens. Valid hashtags

usually consist of a #, plus a sequence of ASCII characters with no

whitespace, making them easy to match as well.

### {executable="true"}

from spacy.lang.en import English

from spacy.matcher import Matcher

nlp = English() # We only want the tokenizer, so no need to load a pipeline

matcher = Matcher(nlp.vocab)

pos_emoji = ["😀", "😃", "😂", "🤣", "😊", "😍"] # Positive emoji

neg_emoji = ["😞", "😠", "😩", "😢", "😭", "😒"] # Negative emoji

# Add patterns to match one or more emoji tokens

pos_patterns = [[{"ORTH": emoji}] for emoji in pos_emoji]

neg_patterns = [[{"ORTH": emoji}] for emoji in neg_emoji]

# Function to label the sentiment

def label_sentiment(matcher, doc, i, matches):

match_id, start, end = matches[i]

if doc.vocab.strings[match_id] == "HAPPY": # Don't forget to get string!

doc.sentiment += 0.1 # Add 0.1 for positive sentiment

elif doc.vocab.strings[match_id] == "SAD":

doc.sentiment -= 0.1 # Subtract 0.1 for negative sentiment

matcher.add("HAPPY", pos_patterns, on_match=label_sentiment) # Add positive pattern

matcher.add("SAD", neg_patterns, on_match=label_sentiment) # Add negative pattern

# Add pattern for valid hashtag, i.e. '#' plus any ASCII token

matcher.add("HASHTAG", [[{"ORTH": "#"}, {"IS_ASCII": True}]])

doc = nlp("Hello world 😀 #MondayMotivation")

matches = matcher(doc)

for match_id, start, end in matches:

string_id = doc.vocab.strings[match_id] # Look up string ID

span = doc[start:end]

print(string_id, span.text)

Because the on_match callback receives the ID of each match, you can use the

same function to handle the sentiment assignment for both the positive and

negative pattern. To keep it simple, we'll either add or subtract 0.1 points –

this way, the score will also reflect combinations of emoji, even positive and

negative ones.

With a library like Emojipedia,

we can also retrieve a short description for each emoji – for example, 😍's

official title is "Smiling Face With Heart-Eyes". Assigning it to a

custom attribute on

the emoji span will make it available as span._.emoji_desc.

from emojipedia import Emojipedia # Installation: pip install emojipedia

from spacy.tokens import Span # Get the global Span object

Span.set_extension("emoji_desc", default=None) # Register the custom attribute

def label_sentiment(matcher, doc, i, matches):

match_id, start, end = matches[i]

if doc.vocab.strings[match_id] == "HAPPY": # Don't forget to get string!

doc.sentiment += 0.1 # Add 0.1 for positive sentiment

elif doc.vocab.strings[match_id] == "SAD":

doc.sentiment -= 0.1 # Subtract 0.1 for negative sentiment

span = doc[start:end]

emoji = Emojipedia.search(span[0].text) # Get data for emoji

span._.emoji_desc = emoji.title # Assign emoji description

To label the hashtags, we can use a custom attribute set on the respective token:

### {executable="true"}

import spacy

from spacy.matcher import Matcher

from spacy.tokens import Token

nlp = spacy.load("en_core_web_sm")

matcher = Matcher(nlp.vocab)

# Add pattern for valid hashtag, i.e. '#' plus any ASCII token

matcher.add("HASHTAG", [[{"ORTH": "#"}, {"IS_ASCII": True}]])

# Register token extension

Token.set_extension("is_hashtag", default=False)

doc = nlp("Hello world 😀 #MondayMotivation")

matches = matcher(doc)

hashtags = []

for match_id, start, end in matches:

if doc.vocab.strings[match_id] == "HASHTAG":

hashtags.append(doc[start:end])

with doc.retokenize() as retokenizer:

for span in hashtags:

retokenizer.merge(span)

for token in span:

token._.is_hashtag = True

for token in doc:

print(token.text, token._.is_hashtag)

Efficient phrase matching

If you need to match large terminology lists, you can also use the

PhraseMatcher and create Doc objects

instead of token patterns, which is much more efficient overall. The Doc

patterns can contain single or multiple tokens.

Adding phrase patterns

### {executable="true"}

import spacy

from spacy.matcher import PhraseMatcher

nlp = spacy.load("en_core_web_sm")

matcher = PhraseMatcher(nlp.vocab)

terms = ["Barack Obama", "Angela Merkel", "Washington, D.C."]

# Only run nlp.make_doc to speed things up

patterns = [nlp.make_doc(text) for text in terms]

matcher.add("TerminologyList", patterns)

doc = nlp("German Chancellor Angela Merkel and US President Barack Obama "

"converse in the Oval Office inside the White House in Washington, D.C.")

matches = matcher(doc)

for match_id, start, end in matches:

span = doc[start:end]

print(span.text)

Since spaCy is used for processing both the patterns and the text to be matched,

you won't have to worry about specific tokenization – for example, you can

simply pass in nlp("Washington, D.C.") and won't have to write a complex token

pattern covering the exact tokenization of the term.

To create the patterns, each phrase has to be processed with the nlp object.

If you have a trained pipeline loaded, doing this in a loop or list

comprehension can easily become inefficient and slow. If you only need the

tokenization and lexical attributes, you can run

nlp.make_doc instead, which will only run the

tokenizer. For an additional speed boost, you can also use the

nlp.tokenizer.pipe method, which will process the texts

as a stream.

- patterns = [nlp(term) for term in LOTS_OF_TERMS]

+ patterns = [nlp.make_doc(term) for term in LOTS_OF_TERMS]

+ patterns = list(nlp.tokenizer.pipe(LOTS_OF_TERMS))

Matching on other token attributes

By default, the PhraseMatcher will match on the verbatim token text, e.g.

Token.text. By setting the attr argument on initialization, you can change

which token attribute the matcher should use when comparing the phrase

pattern to the matched Doc. For example, using the attribute LOWER lets you

match on Token.lower and create case-insensitive match patterns:

### {executable="true"}

from spacy.lang.en import English

from spacy.matcher import PhraseMatcher

nlp = English()

matcher = PhraseMatcher(nlp.vocab, attr="LOWER")

patterns = [nlp.make_doc(name) for name in ["Angela Merkel", "Barack Obama"]]

matcher.add("Names", patterns)

doc = nlp("angela merkel and us president barack Obama")

for match_id, start, end in matcher(doc):

print("Matched based on lowercase token text:", doc[start:end])

The examples here use nlp.make_doc to create Doc

object patterns as efficiently as possible and without running any of the other

pipeline components. If the token attribute you want to match on are set by a

pipeline component, make sure that the pipeline component runs when you

create the pattern. For example, to match on POS or LEMMA, the pattern Doc

objects need to have part-of-speech tags set by the tagger or morphologizer.

You can either call the nlp object on your pattern texts instead of

nlp.make_doc, or use nlp.select_pipes to

disable components selectively.

Another possible use case is matching number tokens like IP addresses based on

their shape. This means that you won't have to worry about how those string will

be tokenized and you'll be able to find tokens and combinations of tokens based

on a few examples. Here, we're matching on the shapes ddd.d.d.d and

ddd.ddd.d.d:

### {executable="true"}

from spacy.lang.en import English

from spacy.matcher import PhraseMatcher

nlp = English()

matcher = PhraseMatcher(nlp.vocab, attr="SHAPE")

matcher.add("IP", [nlp("127.0.0.1"), nlp("127.127.0.0")])

doc = nlp("Often the router will have an IP address such as 192.168.1.1 or 192.168.2.1.")

for match_id, start, end in matcher(doc):

print("Matched based on token shape:", doc[start:end])

In theory, the same also works for attributes like POS. For example, a pattern

nlp("I like cats") matched based on its part-of-speech tag would return a

match for "I love dogs". You could also match on boolean flags like IS_PUNCT

to match phrases with the same sequence of punctuation and non-punctuation

tokens as the pattern. But this can easily get confusing and doesn't have much

of an advantage over writing one or two token patterns.

Dependency Matcher

The DependencyMatcher lets you match patterns within

the dependency parse using

Semgrex

operators. It requires a model containing a parser such as the

DependencyParser. Instead of defining a list of

adjacent tokens as in Matcher patterns, the DependencyMatcher patterns match

tokens in the dependency parse and specify the relations between them.

### Example from spacy.matcher import DependencyMatcher # "[subject] ... initially founded" pattern = [ # anchor token: founded { "RIGHT_ID": "founded", "RIGHT_ATTRS": {"ORTH": "founded"} }, # founded -> subject { "LEFT_ID": "founded", "REL_OP": ">", "RIGHT_ID": "subject", "RIGHT_ATTRS": {"DEP": "nsubj"} }, # "founded" follows "initially" { "LEFT_ID": "founded", "REL_OP": ";", "RIGHT_ID": "initially", "RIGHT_ATTRS": {"ORTH": "initially"} } ] matcher = DependencyMatcher(nlp.vocab) matcher.add("FOUNDED", [pattern]) matches = matcher(doc)

A pattern added to the dependency matcher consists of a list of

dictionaries, with each dictionary describing a token to match and its

relation to an existing token in the pattern. Except for the first

dictionary, which defines an anchor token using only RIGHT_ID and

RIGHT_ATTRS, each pattern should have the following keys:

| Name | Description |

|---|---|

LEFT_ID |

The name of the left-hand node in the relation, which has been defined in an earlier node. |

REL_OP |

An operator that describes how the two nodes are related. |

RIGHT_ID |

A unique name for the right-hand node in the relation. |

RIGHT_ATTRS |

The token attributes to match for the right-hand node in the same format as patterns provided to the regular token-based Matcher. |

Each additional token added to the pattern is linked to an existing token

LEFT_ID by the relation REL_OP. The new token is given the name RIGHT_ID

and described by the attributes RIGHT_ATTRS.

Because the unique token names in LEFT_ID and RIGHT_ID are used to

identify tokens, the order of the dicts in the patterns is important: a token

name needs to be defined as RIGHT_ID in one dict in the pattern before it

can be used as LEFT_ID in another dict.

Dependency matcher operators

The following operators are supported by the DependencyMatcher, most of which

come directly from

Semgrex:

| Symbol | Description |

|---|---|

A < B |

A is the immediate dependent of B. |

A > B |

A is the immediate head of B. |

A << B |

A is the dependent in a chain to B following dep → head paths. |

A >> B |

A is the head in a chain to B following head → dep paths. |

A . B |

A immediately precedes B, i.e. A.i == B.i - 1, and both are within the same dependency tree. |

A .* B |

A precedes B, i.e. A.i < B.i, and both are within the same dependency tree (not in Semgrex). |

A ; B |

A immediately follows B, i.e. A.i == B.i + 1, and both are within the same dependency tree (not in Semgrex). |

A ;* B |

A follows B, i.e. A.i > B.i, and both are within the same dependency tree (not in Semgrex). |

A $+ B |

B is a right immediate sibling of A, i.e. A and B have the same parent and A.i == B.i - 1. |

A $- B |

B is a left immediate sibling of A, i.e. A and B have the same parent and A.i == B.i + 1. |

A $++ B |

B is a right sibling of A, i.e. A and B have the same parent and A.i < B.i. |

A $-- B |

B is a left sibling of A, i.e. A and B have the same parent and A.i > B.i. |

Designing dependency matcher patterns

Let's say we want to find sentences describing who founded what kind of company:

- Smith founded a healthcare company in 2005.

- Williams initially founded an insurance company in 1987.

- Lee, an experienced CEO, has founded two AI startups.

The dependency parse for "Smith founded a healthcare company" shows types of relations and tokens we want to match:

Visualizing the parse

The

displacyvisualizer lets you renderDocobjects and their dependency parse and part-of-speech tags:import spacy from spacy import displacy nlp = spacy.load("en_core_web_sm") doc = nlp("Smith founded a healthcare company") displacy.serve(doc)

import DisplaCyDepFoundedHtml from 'images/displacy-dep-founded.html'