20 KiB

| title | next | menu | ||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Training Models | /usage/projects |

|

Introduction to training models

import Training101 from 'usage/101/_training.md'

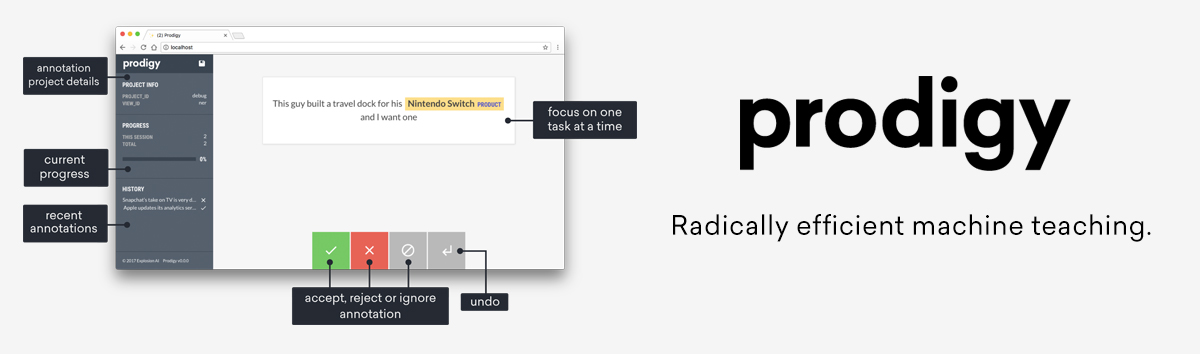

If you need to label a lot of data, check out Prodigy, a new, active learning-powered annotation tool we've developed. Prodigy is fast and extensible, and comes with a modern web application that helps you collect training data faster. It integrates seamlessly with spaCy, pre-selects the most relevant examples for annotation, and lets you train and evaluate ready-to-use spaCy models.

Training CLI & config

The recommended way to train your spaCy models is via the

spacy train command on the command line.

- The training and evaluation data in spaCy's

binary

.spacyformat created usingspacy convert. - A

config.cfgconfiguration file with all settings and hyperparameters. - An optional Python file to register custom models and architectures.

$ python -m spacy train train.spacy dev.spacy config.cfg --output ./output

Tip: Debug your data

The

debug-datacommand lets you analyze and validate your training and development data, get useful stats, and find problems like invalid entity annotations, cyclic dependencies, low data labels and more.$ python -m spacy debug-data en train.spacy dev.spacy --verbose

The easiest way to get started with an end-to-end training process is to clone a project template. Projects let you manage multi-step workflows, from data preprocessing to training and packaging your model.

When you train a model using the spacy train command, you'll

see a table showing metrics after each pass over the data. Here's what those

metrics means:

| Name | Description |

|---|---|

Dep Loss |

Training loss for dependency parser. Should decrease, but usually not to 0. |

NER Loss |

Training loss for named entity recognizer. Should decrease, but usually not to 0. |

UAS |

Unlabeled attachment score for parser. The percentage of unlabeled correct arcs. Should increase. |

NER P. |

NER precision on development data. Should increase. |

NER R. |

NER recall on development data. Should increase. |

NER F. |

NER F-score on development data. Should increase. |

Tag % |

Fine-grained part-of-speech tag accuracy on development data. Should increase. |

Token % |

Tokenization accuracy on development data. |

CPU WPS |

Prediction speed on CPU in words per second, if available. Should stay stable. |

GPU WPS |

Prediction speed on GPU in words per second, if available. Should stay stable. |

Note that if the development data has raw text, some of the gold-standard entities might not align to the predicted tokenization. These tokenization errors are excluded from the NER evaluation. If your tokenization makes it impossible for the model to predict 50% of your entities, your NER F-score might still look good.

Training config files

Migration from spaCy v2.x

TODO: once we have an answer for how to update the training command (

spacy migrate?), add details here

Training config files include all settings and hyperparameters for training

your model. Instead of providing lots of arguments on the command line, you only

need to pass your config.cfg file to spacy train. Under

the hood, the training config uses the

configuration system provided by our

machine learning library Thinc. This also makes it easy to

integrate custom models and architectures, written in your framework of choice.

Some of the main advantages and features of spaCy's training config are:

- Structured sections. The config is grouped into sections, and nested

sections are defined using the

.notation. For example,[nlp.pipeline.ner]defines the settings for the pipeline's named entity recognizer. The config can be loaded as a Python dict. - References to registered functions. Sections can refer to registered functions like model architectures, optimizers or schedules and define arguments that are passed into them. You can also register your own functions to define custom architectures, reference them in your config and tweak their parameters.

- Interpolation. If you have hyperparameters used by multiple components, define them once and reference them as variables.

- Reproducibility with no hidden defaults. The config file is the "single source of truth" and includes all settings.

- Automated checks and validation. When you load a config, spaCy checks if the settings are complete and if all values have the correct types. This lets you catch potential mistakes early. In your custom architectures, you can use Python type hints to tell the config which types of data to expect.

[training]

use_gpu = -1

limit = 0

dropout = 0.2

patience = 1000

eval_frequency = 20

scores = ["ents_p", "ents_r", "ents_f"]

score_weights = {"ents_f": 1}

orth_variant_level = 0.0

gold_preproc = false

max_length = 0

seed = 0

accumulate_gradient = 1

discard_oversize = false

[training.batch_size]

@schedules = "compounding.v1"

start = 100

stop = 1000

compound = 1.001

[training.optimizer]

@optimizers = "Adam.v1"

learn_rate = 0.001

beta1 = 0.9

beta2 = 0.999

use_averages = false

[nlp]

lang = "en"

vectors = null

[nlp.pipeline.ner]

factory = "ner"

[nlp.pipeline.ner.model]

@architectures = "spacy.TransitionBasedParser.v1"

nr_feature_tokens = 3

hidden_width = 128

maxout_pieces = 3

use_upper = true

[nlp.pipeline.ner.model.tok2vec]

@architectures = "spacy.HashEmbedCNN.v1"

width = 128

depth = 4

embed_size = 7000

maxout_pieces = 3

window_size = 1

subword_features = true

pretrained_vectors = null

dropout = null

For a full overview of spaCy's config format and settings, see the training format documentation. The settings available for the different architectures are documented with the model architectures API. See the Thinc documentation for optimizers and schedules.

Using registered functions

The training configuration defined in the config file doesn't have to only

consist of static values. Some settings can also be functions. For instance,

the batch_size can be a number that doesn't change, or a schedule, like a

sequence of compounding values, which has shown to be an effective trick (see

Smith et al., 2017).

### With static value

[training]

batch_size = 128

To refer to a function instead, you can make [training.batch_size] its own

section and use the @ syntax specify the function and its arguments – in this

case compounding.v1 defined

in the function registry. All other values defined in

the block are passed to the function as keyword arguments when it's initialized.

You can also use this mechanism to register

custom implementations and architectures and reference them

from your configs.

TODO

TODO: something about how the tree is built bottom-up?

### With registered function

[training.batch_size]

@schedules = "compounding.v1"

start = 100

stop = 1000

compound = 1.001

Model architectures

Transfer learning

Using transformer models like BERT

Try out a BERT-based model pipeline using this project template: swap in your data, edit the settings and hyperparameters and train, evaluate, package and visualize your model.

Pretraining with spaCy

Custom model implementations and architectures

Training with custom code

The spacy train recipe lets you specify an optional argument

--code that points to a Python file. The file is imported before training and

allows you to add custom functions and architectures to the function registry

that can then be referenced from your config.cfg. This lets you train spaCy

models with custom components, without having to re-implement the whole training

workflow.

For example, let's say you've implemented your own batch size schedule to use

during training. The @spacy.registry.schedules decorator lets you register

that function in the schedules registry and assign

it a string name:

Why the version in the name?

A big benefit of the config system is that it makes your experiments reproducible. We recommend versioning the functions you register, especially if you expect them to change (like a new model architecture). This way, you know that a config referencing

v1means a different function than a config referencingv2.

### functions.py

import spacy

@spacy.registry.schedules("my_custom_schedule.v1")

def my_custom_schedule(start: int = 1, factor: int = 1.001):

while True:

yield start

start = start * factor

In your config, you can now reference the schedule in the

[training.batch_size] block via @schedules. If a block contains a key

starting with an @, it's interpreted as a reference to a function. All other

settings in the block will be passed to the function as keyword arguments. Keep

in mind that the config shouldn't have any hidden defaults and all arguments on

the functions need to be represented in the config.

### config.cfg (excerpt)

[training.batch_size]

@schedules = "my_custom_schedule.v1"

start = 2

factor = 1.005

You can now run spacy train with the config.cfg and your

custom functions.py as the argument --code. Before loading the config, spaCy

will import the functions.py module and your custom functions will be

registered.

### Training with custom code {wrap="true"}

python -m spacy train train.spacy dev.spacy config.cfg --output ./output --code ./functions.py

spaCy's configs are powered by our machine learning library Thinc's

configuration system, which supports

type hints and even

advanced type annotations

using pydantic. If your registered

function provides For example, start: int in the example above will ensure

that the value received as the argument start is an integer. If the value

can't be cast to an integer, spaCy will raise an error.

start: pydantic.StrictInt will force the value to be an integer and raise an

error if it's not – for instance, if your config defines a float.

Defining custom architectures

Wrapping PyTorch and TensorFlow

Lorem ipsum dolor sit amet, consectetur adipiscing elit. Phasellus interdum sodales lectus, ut sodales orci ullamcorper id. Sed condimentum neque ut erat mattis pretium.

Parallel Training with Ray

Lorem ipsum dolor sit amet, consectetur adipiscing elit. Phasellus interdum sodales lectus, ut sodales orci ullamcorper id. Sed condimentum neque ut erat mattis pretium.

Internal training API

spaCy gives you full control over the training loop. However, for most use

cases, it's recommended to train your models via the

spacy train command with a config.cfg to keep

track of your settings and hyperparameters, instead of writing your own training

scripts from scratch.

The Example object contains annotated training data, also

called the gold standard. It's initialized with a Doc object

that will hold the predictions, and another Doc object that holds the

gold-standard annotations. Here's an example of a simple Example for

part-of-speech tags:

words = ["I", "like", "stuff"]

predicted = Doc(vocab, words=words)

# create the reference Doc with gold-standard TAG annotations

tags = ["NOUN", "VERB", "NOUN"]

tag_ids = [vocab.strings.add(tag) for tag in tags]

reference = Doc(vocab, words=words).from_array("TAG", numpy.array(tag_ids, dtype="uint64"))

example = Example(predicted, reference)

Alternatively, the reference Doc with the gold-standard annotations can be

created from a dictionary with keyword arguments specifying the annotations,

like tags or entities. Using the Example object and its gold-standard

annotations, the model can be updated to learn a sentence of three words with

their assigned part-of-speech tags.

About the tag map

The tag map is part of the vocabulary and defines the annotation scheme. If you're training a new language model, this will let you map the tags present in the treebank you train on to spaCy's tag scheme:

tag_map = {"N": {"pos": "NOUN"}, "V": {"pos": "VERB"}} vocab = Vocab(tag_map=tag_map)

words = ["I", "like", "stuff"]

tags = ["NOUN", "VERB", "NOUN"]

predicted = Doc(nlp.vocab, words=words)

example = Example.from_dict(predicted, {"tags": tags})

Here's another example that shows how to define gold-standard named entities.

The letters added before the labels refer to the tags of the

BILUO scheme – O is a token

outside an entity, U an single entity unit, B the beginning of an entity,

I a token inside an entity and L the last token of an entity.

doc = Doc(nlp.vocab, words=["Facebook", "released", "React", "in", "2014"])

example = Example.from_dict(doc, {"entities": ["U-ORG", "O", "U-TECHNOLOGY", "O", "U-DATE"]})

As of v3.0, the Example object replaces the GoldParse class.

It can be constructed in a very similar way, from a Doc and a dictionary of

annotations:

- gold = GoldParse(doc, entities=entities)

+ example = Example.from_dict(doc, {"entities": entities})

- Training data: The training examples.

- Text and label: The current example.

- Doc: A

Docobject created from the example text.- Example: An

Exampleobject holding both predictions and gold-standard annotations.- nlp: The

nlpobject with the model.- Optimizer: A function that holds state between updates.

- Update: Update the model's weights.

Of course, it's not enough to only show a model a single example once.

Especially if you only have few examples, you'll want to train for a number of

iterations. At each iteration, the training data is shuffled to ensure the

model doesn't make any generalizations based on the order of examples. Another

technique to improve the learning results is to set a dropout rate, a rate

at which to randomly "drop" individual features and representations. This makes

it harder for the model to memorize the training data. For example, a 0.25

dropout means that each feature or internal representation has a 1/4 likelihood

of being dropped.

begin_training: Start the training and return anOptimizerobject to update the model's weights.update: Update the model with the training examplea.to_disk: Save the updated model to a directory.

### Example training loop

optimizer = nlp.begin_training()

for itn in range(100):

random.shuffle(train_data)

for raw_text, entity_offsets in train_data:

doc = nlp.make_doc(raw_text)

example = Example.from_dict(doc, {"entities": entity_offsets})

nlp.update([example], sgd=optimizer)

nlp.to_disk("/model")

The nlp.update method takes the following arguments:

| Name | Description |

|---|---|

examples |

Example objects. The update method takes a sequence of them, so you can batch up your training examples. |

drop |

Dropout rate. Makes it harder for the model to just memorize the data. |

sgd |

An Optimizer object, which updated the model's weights. If not set, spaCy will create a new one and save it for further use. |

As of v3.0, the Example object replaces the GoldParse class

and the "simple training style" of calling nlp.update with a text and a

dictionary of annotations. Updating your code to use the Example object should

be very straightforward: you can call

Example.from_dict with a Doc and the

dictionary of annotations:

text = "Facebook released React in 2014"

annotations = {"entities": ["U-ORG", "O", "U-TECHNOLOGY", "O", "U-DATE"]}

+ example = Example.from_dict(nlp.make_doc(text), {"entities": entities})

- nlp.update([text], [annotations])

+ nlp.update([example])